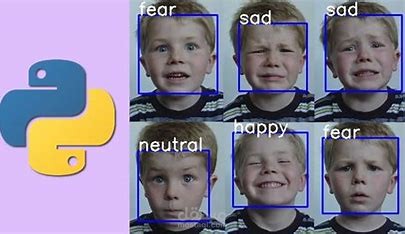

Emotion Detection

Project Details

Data Collection:

Gather a dataset of facial images labeled with emotion categories, such as FER2013 (48×48 grayscale faces labeled with seven emotions) or CK+.

Check that the dataset covers people of different genders, ages, and ethnicities. A model trained mostly on one group tends to misread expressions on faces from under-represented groups.
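A minimal sketch for loading FER2013, assuming the commonly distributed `fer2013.csv` layout: columns `emotion`, `pixels`, and `Usage`, with each image stored as 2,304 space-separated grayscale values (48×48). The helper names `parse_fer2013` and `class_counts` are hypothetical. Counting labels is a quick first balance check; auditing gender, age, and ethnicity coverage needs demographic annotations that this CSV does not contain.

```python
import csv
import io
from collections import Counter

# FER2013 label ids, in the order commonly documented for fer2013.csv.
EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]
SIDE = 48  # FER2013 images are 48x48 grayscale


def parse_fer2013(text_stream):
    """Yield (label_id, image_rows, usage) tuples from a FER2013-style CSV stream."""
    reader = csv.DictReader(text_stream)
    for row in reader:
        values = [int(v) for v in row["pixels"].split()]
        if len(values) != SIDE * SIDE:
            raise ValueError(f"expected {SIDE * SIDE} pixels, got {len(values)}")
        # Reshape the flat pixel list into SIDE rows of SIDE pixels each.
        image = [values[r * SIDE:(r + 1) * SIDE] for r in range(SIDE)]
        yield int(row["emotion"]), image, row["Usage"]


def class_counts(samples):
    """Count samples per emotion name to spot class imbalance."""
    return Counter(EMOTIONS[label] for label, _, _ in samples)
```

With a real file, open it with `newline=""` (as the `csv` module recommends) and pass the file object to `parse_fer2013`.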

Data Preprocessing:

Convert each image to grayscale, resize it to the fixed input size the model expects (48×48 pixels for FER2013), and normalize pixel values from [0, 255] to [0, 1].
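The three preprocessing steps can be sketched in NumPy as below, assuming RGB input arrays of shape (H, W, 3) and a 48×48 target size. Grayscale conversion uses the ITU-R BT.601 luma weights; resizing is nearest-neighbour to keep the example dependency-free, whereas in practice `cv2.resize(..., interpolation=cv2.INTER_AREA)` is a common choice for downscaling. `preprocess` and the other function names are hypothetical.

```python
import numpy as np

TARGET = 48  # assumed model input side length


def to_grayscale(rgb):
    """Convert an (H, W, 3+) RGB array to (H, W) luminance with BT.601 weights."""
    weights = np.array([0.299, 0.587, 0.114])
    # Drop any alpha channel, then take a weighted sum over the colour axis.
    return rgb[..., :3].astype(np.float64) @ weights


def resize_nearest(img, side=TARGET):
    """Nearest-neighbour resize of a 2-D array to (side, side)."""
    h, w = img.shape
    rows = np.arange(side) * h // side
    cols = np.arange(side) * w // side
    return img[rows[:, None], cols[None, :]]


def preprocess(image):
    """Grayscale -> resize -> scale pixel values from [0, 255] to [0, 1]."""
    gray = to_grayscale(image) if image.ndim == 3 else image.astype(np.float64)
    small = resize_nearest(gray)
    return (small / 255.0).astype(np.float32)
```

Whatever resizing method is chosen, the same `preprocess` step must be reused at inference time so the model sees inputs with the same scale and size it was trained on.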

Apply data augmentation such as small rotations, zooms, and horizontal flips to the training images only. This adds variety to the training data and makes the model more robust to changes in head pose and framing.
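A NumPy sketch of the three augmentations, applied to one 2-D grayscale image. The ranges (±15° rotation, up to 20% zoom, a 50% chance of a horizontal flip) are illustrative assumptions, and `augment` is a hypothetical name; Keras users would more typically use the built-in `RandomFlip`, `RandomRotation`, and `RandomZoom` preprocessing layers. Only horizontal flips are used, since an upside-down face is not a realistic input.

```python
import numpy as np


def flip_horizontal(img):
    """Mirror the image left-to-right."""
    return img[:, ::-1]


def rotate(img, degrees):
    """Rotate about the centre with nearest-neighbour sampling; exposed corners become 0."""
    h, w = img.shape
    theta = np.deg2rad(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.indices((h, w))
    # Inverse mapping: for every output pixel, find the source pixel it came from.
    src_x = np.cos(theta) * (xs - cx) + np.sin(theta) * (ys - cy) + cx
    src_y = -np.sin(theta) * (xs - cx) + np.cos(theta) * (ys - cy) + cy
    sx, sy = np.rint(src_x).astype(int), np.rint(src_y).astype(int)
    inside = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.zeros_like(img)
    out[inside] = img[sy[inside], sx[inside]]
    return out


def zoom_in(img, factor):
    """Crop the central 1/factor region and scale it back up (factor >= 1)."""
    h, w = img.shape
    ch, cw = max(1, round(h / factor)), max(1, round(w / factor))
    top, left = (h - ch) // 2, (w - cw) // 2
    crop = img[top:top + ch, left:left + cw]
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    return crop[rows[:, None], cols[None, :]]


def augment(img, rng):
    """Randomly flip, rotate, and zoom one training image (rng: np.random.Generator)."""
    if rng.random() < 0.5:
        img = flip_horizontal(img)
    img = rotate(img, rng.uniform(-15, 15))
    return zoom_in(img, rng.uniform(1.0, 1.2))
```

Calling `augment(image, np.random.default_rng(seed))` on each batch produces a fresh variant every epoch, so the network rarely sees exactly the same pixels twice.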

Model Building:

Build a Convolutional Neural Network (CNN) that takes a preprocessed face image and classifies it into one of the emotion categories.
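One possible architecture, sketched with the Keras API bundled in TensorFlow: three blocks of two 3×3 convolutions plus 2×2 max pooling, then a dense classifier with dropout. The layer counts, filter sizes, 48×48×1 input, and seven output classes (FER2013's label set) are assumptions to tune, not a prescribed design; `build_emotion_cnn` and `pooled_side` are hypothetical names. TensorFlow is imported inside the builder so the shape helper works without it.

```python
INPUT_SHAPE = (48, 48, 1)   # grayscale 48x48 faces (assumed)
NUM_CLASSES = 7             # e.g., FER2013's seven emotions
FILTERS = (32, 64, 128)     # filters per convolutional block (assumed)


def build_emotion_cnn(input_shape=INPUT_SHAPE, num_classes=NUM_CLASSES,
                      filters=FILTERS, dropout=0.5):
    """Return a compiled Keras CNN that outputs one probability per emotion."""
    from tensorflow import keras  # imported lazily; requires TensorFlow
    layers = keras.layers

    model = keras.Sequential([keras.Input(shape=input_shape)])
    for f in filters:
        # Two 'same'-padded 3x3 convolutions keep the spatial size...
        model.add(layers.Conv2D(f, 3, padding="same", activation="relu"))
        model.add(layers.Conv2D(f, 3, padding="same", activation="relu"))
        # ...and 2x2 max pooling halves it.
        model.add(layers.MaxPooling2D(2))
    model.add(layers.Flatten())
    model.add(layers.Dense(256, activation="relu"))
    model.add(layers.Dropout(dropout))  # randomly zeroes units during training
    model.add(layers.Dense(num_classes, activation="softmax"))
    model.compile(optimizer="adam",
                  loss="sparse_categorical_crossentropy",  # integer labels
                  metrics=["accuracy"])
    return model


def pooled_side(side, n_blocks=len(FILTERS)):
    """Spatial side length after n_blocks of 2x2 pooling (floor division)."""
    for _ in range(n_blocks):
        side //= 2
    return side
```

With these defaults the final feature map is 6×6×128 before flattening; `sparse_categorical_crossentropy` is used because FER2013-style labels are integer class ids rather than one-hot vectors.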

Train the model on the preprocessed training data and monitor its performance on a separate validation set that is never used to update the weights.
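A minimal stratified train/validation split in plain Python, assuming samples are `(image, label)` pairs; `stratified_split` is a hypothetical helper, and scikit-learn's `train_test_split(..., stratify=labels)` offers the same behaviour if that library is available. Stratifying keeps rare classes (such as FER2013's small Disgust class) represented in both splits.

```python
import random
from collections import defaultdict


def stratified_split(samples, val_fraction=0.2, seed=42):
    """Split (x, label) pairs so each label keeps roughly the same share in both sets."""
    by_label = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)
    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val = [], []
    for label in sorted(by_label):
        group = by_label[label][:]
        rng.shuffle(group)
        n_val = round(len(group) * val_fraction)
        val.extend(group[:n_val])
        train.extend(group[n_val:])
    # Shuffle again so batches are not ordered by class.
    rng.shuffle(train)
    rng.shuffle(val)
    return train, val
```

The two lists can then be stacked into arrays and passed to Keras as `model.fit(x_train, y_train, validation_data=(x_val, y_val), epochs=..., batch_size=...)`, which reports validation loss and accuracy after every epoch.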

Model Evaluation:

Evaluate the model's performance on a held-out test set using accuracy, per-class precision and recall, and a confusion matrix.
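These metrics can all be derived from a confusion matrix, sketched below in plain Python with rows as true labels and columns as predictions. Per class, precision = TP / (TP + FP) and recall = TP / (TP + FN); reporting 0 when a denominator is empty is a convention chosen here, not the only option. The function names are hypothetical; scikit-learn's `confusion_matrix` and `classification_report` compute the same quantities.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """matrix[t][p] counts samples with true class t predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(map(sum, matrix))
    return correct / total if total else 0.0


def precision_recall(matrix):
    """Return per-class (precision, recall) lists."""
    n = len(matrix)
    precision, recall = [], []
    for c in range(n):
        tp = matrix[c][c]
        predicted_c = sum(matrix[t][c] for t in range(n))  # column sum: TP + FP
        actual_c = sum(matrix[c])                           # row sum: TP + FN
        precision.append(tp / predicted_c if predicted_c else 0.0)
        recall.append(tp / actual_c if actual_c else 0.0)
    return precision, recall
```

With imbalanced emotion classes, per-class recall exposes weak classes that a high overall accuracy can hide, and the off-diagonal cells show which emotions are confused with each other.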

Tune hyperparameters such as the learning rate, batch size, and dropout rate against the validation set, and use early stopping and dropout to reduce overfitting and improve the model's generalization.
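Early stopping halts training once the validation loss has not improved for a set number of epochs (the patience). The logic is small enough to sketch directly; `EarlyStopper` is a hypothetical class, and in Keras the built-in `keras.callbacks.EarlyStopping(monitor="val_loss", patience=5, restore_best_weights=True)` handles this, including restoring the best weights.

```python
class EarlyStopper:
    """Signal a stop after `patience` epochs without a meaningful val-loss improvement."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta  # smallest drop that counts as an improvement
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch  # the epoch whose weights should be kept
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

A small `patience` stops quickly but can cut off training during a temporary plateau; values around 5 to 10 epochs are a common starting point to tune.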

Real-Time Emotion Detection:

Integrate the model with a webcam or video feed to perform real-time emotion detection.

Use OpenCV to capture live video frames, locate the face in each frame, apply the same preprocessing used during training, and feed each face crop to the trained model for prediction.
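A sketch of the capture loop, assuming a Keras-style model whose `predict` returns class probabilities for a batch of 48×48×1 inputs, FER2013's label order, and OpenCV's bundled Haar cascade as the face detector (the detector is an assumption; any face detector would do). `run_webcam`, `prepare_face`, and `top_emotion` are hypothetical names. OpenCV is imported inside `run_webcam` so the helpers can be tested without a camera.

```python
import numpy as np

EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def prepare_face(face_48x48):
    """Scale a 48x48 grayscale crop to [0, 1] and add batch and channel axes."""
    face = face_48x48.astype(np.float32) / 255.0
    return face[np.newaxis, :, :, np.newaxis]  # shape (1, 48, 48, 1)


def top_emotion(probs):
    """Return (label, confidence) for the most probable class."""
    idx = int(np.argmax(probs))
    return EMOTIONS[idx], float(probs[idx])


def run_webcam(model, camera_index=0):
    import cv2  # requires opencv-python
    detector = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml")
    cap = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
            faces = detector.detectMultiScale(gray, scaleFactor=1.3, minNeighbors=5)
            for (x, y, w, h) in faces:
                face = cv2.resize(gray[y:y + h, x:x + w], (48, 48))
                probs = model.predict(prepare_face(face), verbose=0)[0]
                label, conf = top_emotion(probs)
                cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 255, 0), 2)
                cv2.putText(frame, f"{label} {conf:.2f}", (x, y - 10),
                            cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 0), 2)
            cv2.imshow("Emotion Detection", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):  # press q to quit
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

OpenCV delivers frames in BGR order, hence `COLOR_BGR2GRAY`; the grayscale conversion, 48×48 resize, and [0, 1] scaling mirror the training preprocessing, which matters more for live accuracy than the choice of detector.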

Deployment:

Deploy the emotion detection model in a web or mobile application for real-time use cases such as customer service, virtual assistants, or mental health monitoring.
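For a web deployment, one minimal option is a JSON-over-HTTP endpoint. The sketch below uses only Python's standard `http.server`, assuming clients POST a 48×48 grayscale face as a flat list of 2,304 integers under a hypothetical `"pixels"` key, and that `predict_fn` wraps the trained model and returns one probability per emotion in FER2013 order. The payload format, the `/predict` path, and all function names are illustrative assumptions; a production service would more likely use a web framework, and mobile apps typically convert the model (for example with TensorFlow Lite) for on-device inference.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def handle_predict(body, predict_fn):
    """Validate a JSON request body and return (HTTP status, response dict)."""
    try:
        pixels = json.loads(body)["pixels"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with a 'pixels' list"}
    if (not isinstance(pixels, list) or len(pixels) != 48 * 48
            or not all(isinstance(p, (int, float)) for p in pixels)):
        return 400, {"error": "'pixels' must hold 2304 numbers"}
    probs = predict_fn([p / 255.0 for p in pixels])  # scale to [0, 1]
    best = max(range(len(probs)), key=probs.__getitem__)
    return 200, {"emotion": EMOTIONS[best], "confidence": float(probs[best])}


def make_handler(predict_fn):
    """Build a request handler class bound to the given prediction function."""
    class PredictHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            if self.path != "/predict":
                self.send_error(404)
                return
            length = int(self.headers.get("Content-Length", 0))
            status, payload = handle_predict(self.rfile.read(length), predict_fn)
            data = json.dumps(payload).encode()
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(data)))
            self.end_headers()
            self.wfile.write(data)
    return PredictHandler


def serve(predict_fn, port=8000):
    """Block and serve POST /predict on the given port."""
    HTTPServer(("", port), make_handler(predict_fn)).serve_forever()
```

Keeping validation and prediction in `handle_predict`, separate from the HTTP plumbing, makes the same logic reusable behind a different server or framework.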