Signfy

Work Details

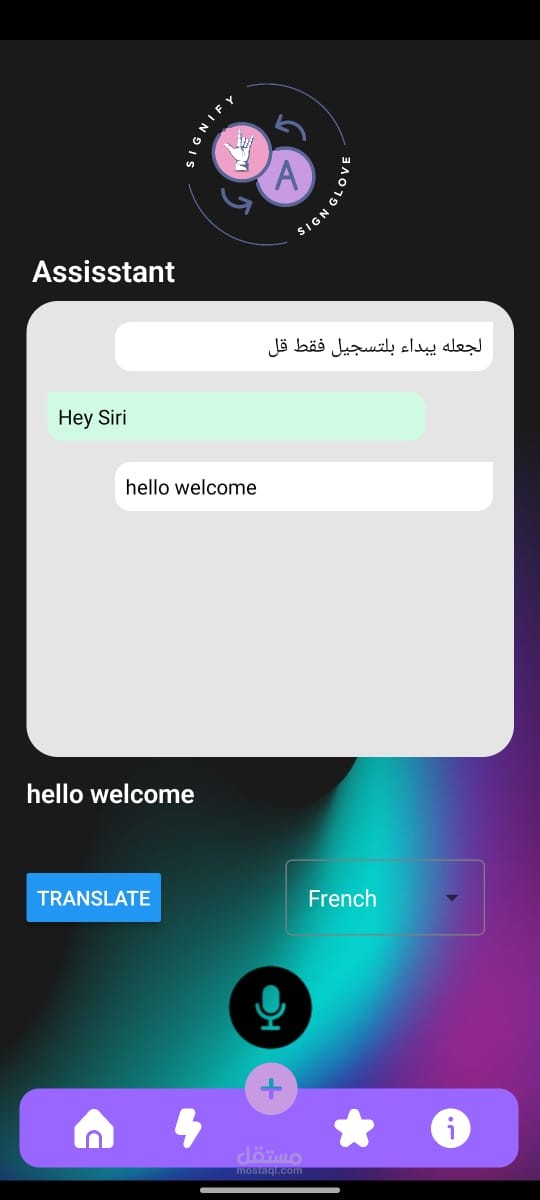

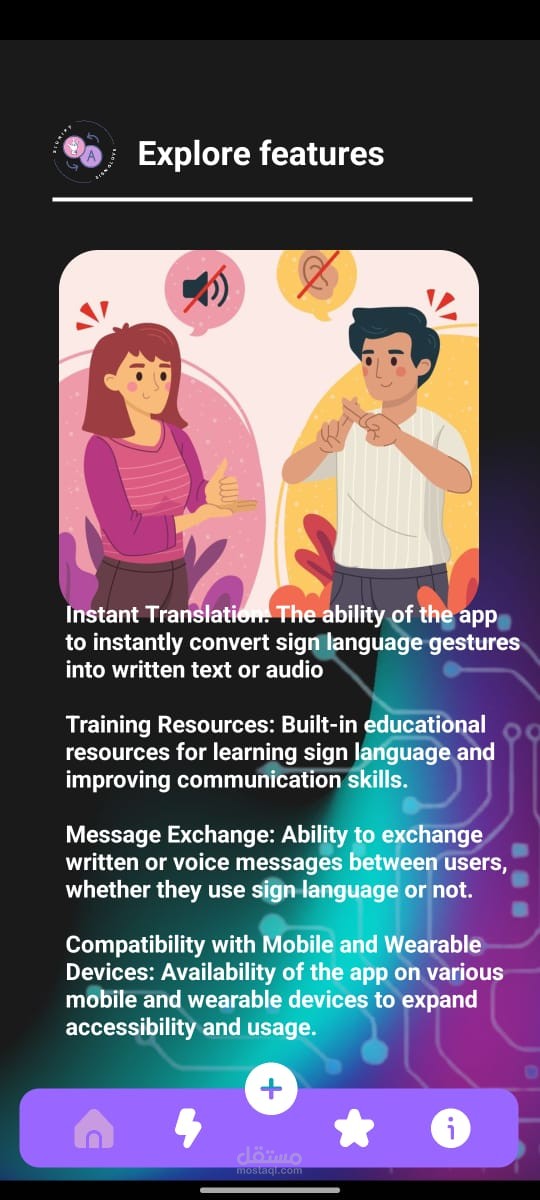

This React Native project bridges spoken and signed language through voice activation and real-time translation. The app uses the Picovoice Porcupine wake-word (hotword) detection engine to listen for a specific keyword; when the keyword is detected, the app automatically starts recording. The recorded speech is transcribed into text, and the app then translates that text into multiple languages using LibreTranslate.
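The flow described above (keyword detected, then record, transcribe, and translate) can be sketched as a small controller. This is a minimal sketch under stated assumptions, not the project's code: `VoicePipeline`, the `recorder` object, and the `transcribe` and `translate` functions are hypothetical injected dependencies standing in for the real Porcupine callback, audio recorder, speech-to-text service, and translation service, and the fixed `recordMs` recording window is a simplification in place of silence detection.

```javascript
// Minimal sketch (not the project's actual code) of the flow:
// wake word detected -> record -> transcribe -> translate.
// recorder, transcribe, and translate are HYPOTHETICAL injected dependencies:
//   recorder.start(): Promise<void>
//   recorder.stop(): Promise<audio>      (audio format is up to the recorder)
//   transcribe(audio): Promise<string>
//   translate(text, targetLang): Promise<string>
class VoicePipeline {
  constructor({ recorder, transcribe, translate, targetLanguages, recordMs = 5000 }) {
    this.recorder = recorder;
    this.transcribe = transcribe;
    this.translate = translate;
    this.targetLanguages = targetLanguages;
    this.recordMs = recordMs; // ASSUMPTION: fixed recording window, no silence detection
    this.busy = false; // true while a record/transcribe/translate cycle is running
  }

  // Meant to be called from the wake-word engine's detection callback.
  // Returns null if a cycle is already in progress.
  async onWakeWord() {
    if (this.busy) return null;
    this.busy = true;
    try {
      await this.recorder.start();
      await new Promise((resolve) => setTimeout(resolve, this.recordMs));
      const audio = await this.recorder.stop();
      const text = await this.transcribe(audio);
      // Request every target language in parallel.
      const entries = await Promise.all(
        this.targetLanguages.map(async (lang) => [lang, await this.translate(text, lang)])
      );
      return { text, translations: Object.fromEntries(entries) };
    } finally {
      this.busy = false; // always re-arm, even if a step throws
    }
  }
}
```

In the app, `onWakeWord` would be wired to Porcupine's keyword-detection callback. The `busy` flag is a design choice of this sketch: it keeps a second detection, such as a repeated trigger sound, from starting an overlapping recording.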

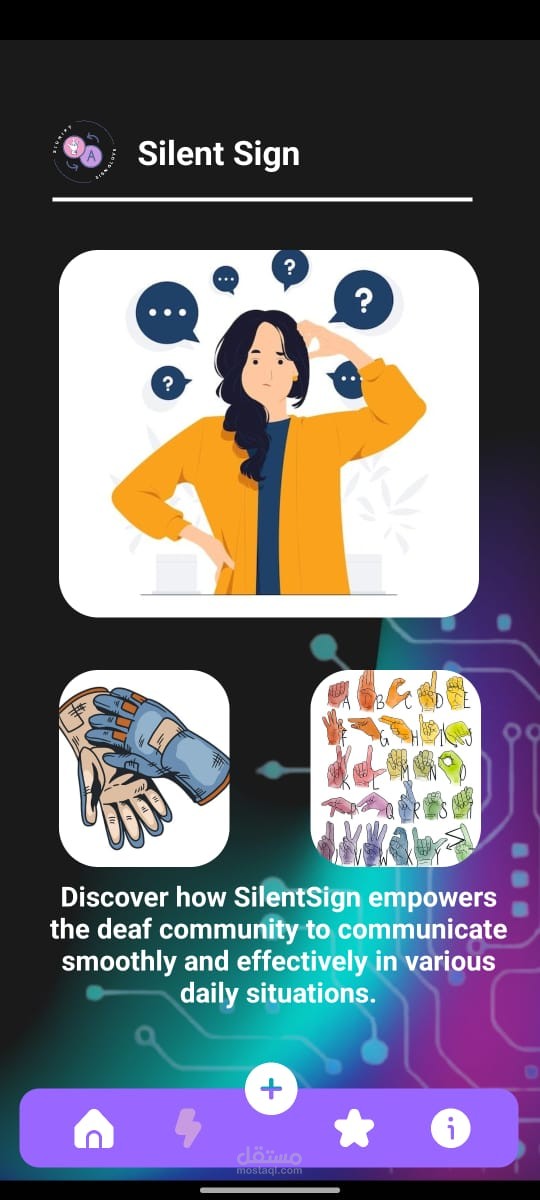

As part of a larger accessibility project, the app is integrated with a sign language glove that has a built-in speaker. When the glove is activated, it emits a sound that automatically starts recording in the app, so users can switch between sign language and voice seamlessly.

Technologies Used:

- React Native & JSX: For building the mobile interface and handling user interactions.
- Tailwind CSS (via NativeWind): For responsive, utility-first styling and a clean design.
- Picovoice Porcupine: For wake-word detection that activates voice recording.
- LibreTranslate: For real-time translation of the transcribed text into multiple languages.
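The translation step can be sketched against LibreTranslate's REST API (`POST /translate`), which this sketch assumes is the translation service the project uses; the project's actual integration is not shown here. `translateText`, `translateToMany`, `baseUrl`, `apiKey`, and `fetchImpl` are illustrative names, not part of any library. The request fields (`q`, `source`, `target`, `format`, `api_key`) and the `translatedText` response field follow LibreTranslate's documented API, but should be checked against the server version in use.

```javascript
// Sketch of multi-language translation, ASSUMING a LibreTranslate server.
// baseUrl and apiKey are placeholders; fetchImpl lets callers inject a fetch
// implementation (defaults to the global fetch available in React Native).
async function translateText(text, target, { baseUrl, apiKey, source = "auto", fetchImpl = fetch } = {}) {
  const body = { q: text, source, target, format: "text" };
  if (apiKey) body.api_key = apiKey; // only needed on instances that require a key
  const res = await fetchImpl(`${baseUrl}/translate`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  if (!res.ok) throw new Error(`LibreTranslate request failed: ${res.status}`);
  const data = await res.json();
  return data.translatedText;
}

// One request per target language, issued concurrently.
// Returns an object keyed by language code, e.g. { ar: "...", es: "..." }.
async function translateToMany(text, targets, options) {
  const results = await Promise.all(targets.map((t) => translateText(text, t, options)));
  return Object.fromEntries(targets.map((t, i) => [t, results[i]]));
}
```

Sending one request per language keeps the helper simple. Because `Promise.all` rejects as soon as any request fails, an app that wants partial results would need to handle failures per language instead.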

Key Features:

- Wake-word detection with Picovoice Porcupine to start voice recording.
- Real-time speech-to-text conversion.
- Multi-language translation using LibreTranslate.
- Integration with a sign language glove that triggers recording when activated.
- A smooth, user-friendly interface, styled with Tailwind CSS for a clean, responsive design.

By combining voice input with a sign language glove, the app makes communication more inclusive and accessible.