Building a Conditional Diffusion Model for Handwritten Digit Generation with PyTorch

Project details

This project implements a Conditional Denoising Diffusion Probabilistic Model (DDPM) from scratch using PyTorch to generate handwritten digits from the MNIST dataset.

The model is based on a U-Net architecture enhanced with:

Residual blocks

Self-attention and linear attention layers

Sinusoidal time embeddings

Class conditioning with classifier-free guidance
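Two of the components listed above, the sinusoidal time embedding and the label dropout that classifier-free guidance relies on during training, can be sketched in a few lines. This is a minimal NumPy illustration, not the project's PyTorch code. The function names and the `NULL_CLASS = 10` "unconditional" token are hypothetical choices made for this sketch.

```python
import numpy as np

NULL_CLASS = 10  # hypothetical "no label" token; MNIST digits use 0..9


def sinusoidal_time_embedding(t, dim):
    """Map integer timesteps t (shape [B]) to [B, dim] sinusoidal features.

    Transformer-style encoding commonly used in DDPMs: the first half of the
    channels is sin(t * f_k), the second half cos(t * f_k), with frequencies
    f_k spaced geometrically from 1 down to 1/10000.
    """
    assert dim % 2 == 0 and dim >= 4, "dim must be even and at least 4"
    half = dim // 2
    freqs = np.exp(-np.log(10000.0) * np.arange(half) / (half - 1))
    args = np.asarray(t, dtype=np.float64)[:, None] * freqs[None, :]
    return np.concatenate([np.sin(args), np.cos(args)], axis=1)


def drop_labels(labels, p_uncond, rng):
    """Classifier-free guidance training trick (assumed setup).

    With probability p_uncond, each class label is replaced by NULL_CLASS,
    so a single network learns both conditional and unconditional noise
    prediction.
    """
    labels = np.array(labels, copy=True)
    mask = rng.random(labels.shape) < p_uncond
    labels[mask] = NULL_CLASS
    return labels


rng = np.random.default_rng(0)
emb = sinusoidal_time_embedding(np.array([0, 10, 999]), dim=32)
print(emb.shape)  # (3, 32)
print(drop_labels(np.arange(10), p_uncond=0.2, rng=rng))
```

In the network, the embedding would typically pass through a small MLP and be added to the feature maps of each residual block. The class label (including the null token) would be embedded and injected in a similar way.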

Key features:

Custom beta scheduler (linear & exponential options)

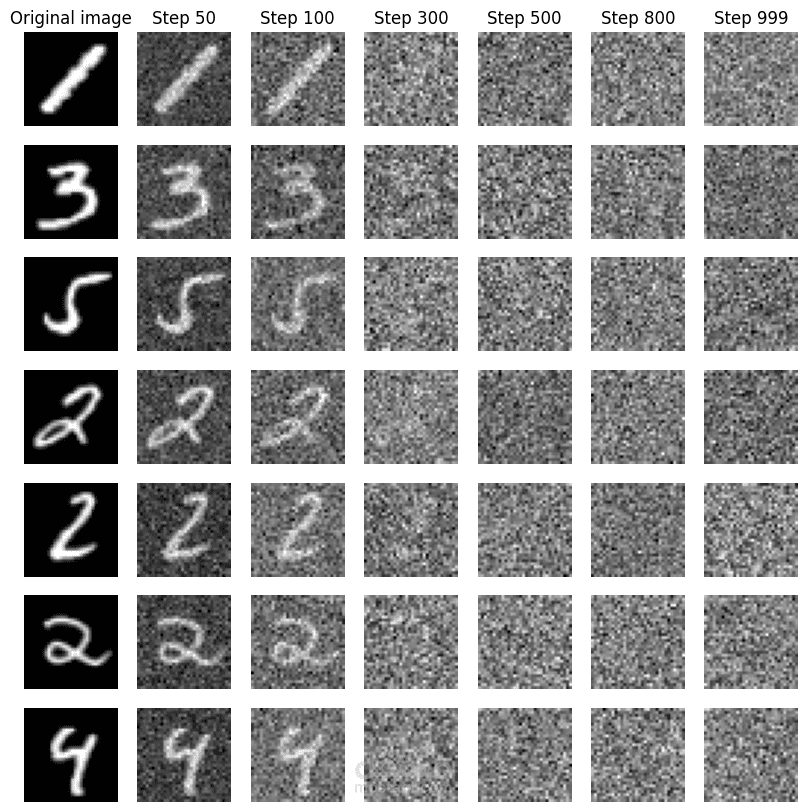

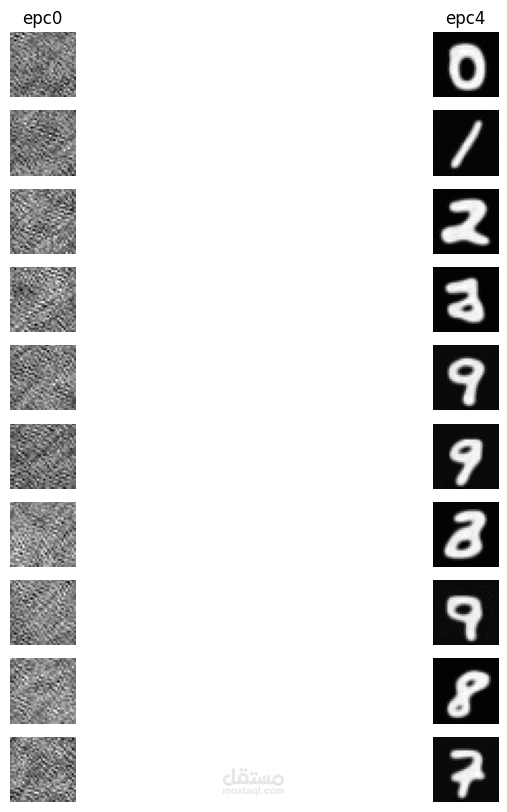

Forward diffusion process (noise addition)

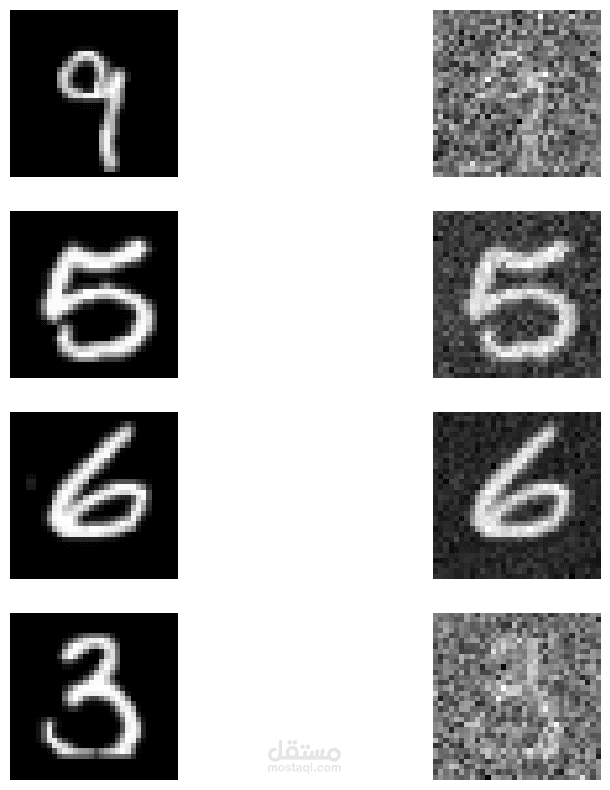

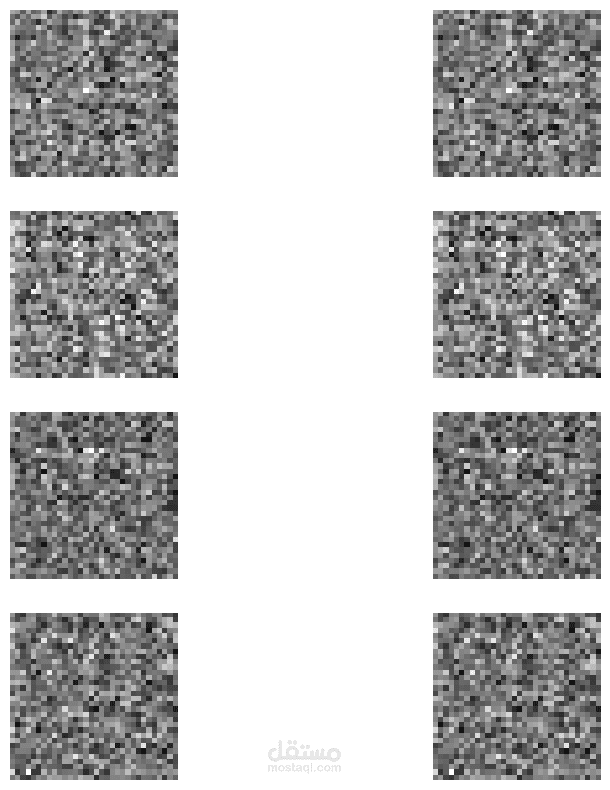

Reverse diffusion process (iterative denoising)

Class-conditional image generation (digits 0–9)

Visualization of intermediate images during generation

GIF animation of the denoising process
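The features above (beta schedule, forward noising, reverse denoising, class-conditional sampling with guidance, and collecting intermediate frames for visualization) fit together roughly as in the following NumPy sketch. It is not the project's implementation. The description does not define the "exponential" schedule, so this sketch ASSUMES geometric spacing between `beta_start` and `beta_end`. The reverse step uses the standard DDPM choice sigma_t^2 = beta_t, and the guidance weight `w=3.0` is an arbitrary example value.

```python
import numpy as np


def make_betas(T, kind="linear", beta_start=1e-4, beta_end=0.02):
    """Noise schedule beta_1..beta_T ("exponential" = geometric spacing, ASSUMED)."""
    if kind == "linear":
        return np.linspace(beta_start, beta_end, T)
    if kind == "exponential":
        return np.geomspace(beta_start, beta_end, T)
    raise ValueError(f"unknown schedule: {kind}")


class Diffusion:
    def __init__(self, T=1000, kind="linear"):
        self.T = T
        self.betas = make_betas(T, kind)
        self.alphas = 1.0 - self.betas
        self.alpha_bars = np.cumprod(self.alphas)  # cumulative product of alphas

    def q_sample(self, x0, t, noise):
        """Forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * noise.
        t holds one timestep index per image in the batch."""
        ab = self.alpha_bars[t].reshape(-1, *([1] * (x0.ndim - 1)))
        return np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * noise

    def p_step(self, xt, t, eps_hat, rng):
        """One reverse (denoising) step at integer timestep t, sigma_t^2 = beta_t."""
        beta, alpha, ab = self.betas[t], self.alphas[t], self.alpha_bars[t]
        mean = (xt - beta / np.sqrt(1.0 - ab) * eps_hat) / np.sqrt(alpha)
        if t == 0:
            return mean  # no noise is added at the final step
        return mean + np.sqrt(beta) * rng.standard_normal(xt.shape)


def guided_eps(model, x, t, y, null_class, w):
    """Classifier-free guidance (one common convention): eps_u + w * (eps_c - eps_u)."""
    eps_c = model(x, t, y)
    eps_u = model(x, t, np.full_like(y, null_class))
    return eps_u + w * (eps_c - eps_u)


def sample(diff, model, y, shape, null_class=10, w=3.0, seed=0):
    """Start from pure noise and denoise from t = T-1 down to 0.
    Returns the final images and every intermediate x_t (e.g. for a GIF)."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(shape)
    frames = [x]
    for t in reversed(range(diff.T)):
        tt = np.full(shape[0], t)
        eps_hat = guided_eps(model, x, tt, y, null_class, w)
        x = diff.p_step(x, t, eps_hat, rng)
        frames.append(x)
    return x, frames


# Stand-in for the trained U-Net: model(x_t, t, y) -> predicted noise.
dummy = lambda x, t, y: np.zeros_like(x)
imgs, frames = sample(Diffusion(T=50), dummy, y=np.arange(10), shape=(10, 1, 28, 28))
print(imgs.shape, len(frames))  # (10, 1, 28, 28) 51
```

The `frames` list is what a progressive-generation plot or GIF would be built from, for example by selecting every k-th frame and mapping values from [-1, 1] to pixel intensities.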

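The model was trained with a mean squared error (MSE) loss to predict the noise added at a randomly sampled diffusion step. This objective trains stably, and combined with class conditioning it makes generation controllable: the user chooses which digit to produce.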
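The training objective described above can be sketched for a single batch as follows. This is a NumPy illustration under assumptions. `predict_noise(x_t, t, y)` is a hypothetical stand-in for the conditional U-Net. In the PyTorch project, the last line would typically be `torch.nn.functional.mse_loss(eps_hat, eps)` followed by a backward pass.

```python
import numpy as np


def ddpm_loss(predict_noise, x0, y, alpha_bars, rng):
    """Simplified DDPM objective for one batch.

    A timestep is drawn uniformly per image, noise is added in closed form,
    and the loss is the MSE between the true and the predicted noise.
    """
    B = x0.shape[0]
    T = len(alpha_bars)
    t = rng.integers(0, T, size=B)                       # random step per image
    eps = rng.standard_normal(x0.shape)                  # the noise to recover
    ab = alpha_bars[t].reshape(B, *([1] * (x0.ndim - 1)))
    x_t = np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * eps     # sample from q(x_t | x_0)
    eps_hat = predict_noise(x_t, t, y)                   # hypothetical model call
    return np.mean((eps_hat - eps) ** 2)


rng = np.random.default_rng(0)
alpha_bars = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))
x0 = rng.uniform(-1, 1, size=(64, 1, 28, 28))            # images scaled to [-1, 1]
y = rng.integers(0, 10, size=64)                         # digit labels
zero_model = lambda x_t, t, y: np.zeros_like(x_t)
print(ddpm_loss(zero_model, x0, y, alpha_bars, rng))     # close to 1.0
```

A model that always predicts zero scores about 1.0, the variance of the standard normal noise. Training drives the loss below that baseline.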

Technologies used:

PyTorch

NumPy

Matplotlib

Einops

MoviePy