Building a High-Accuracy Deep Learning Model for Digit Recognition

Project Details

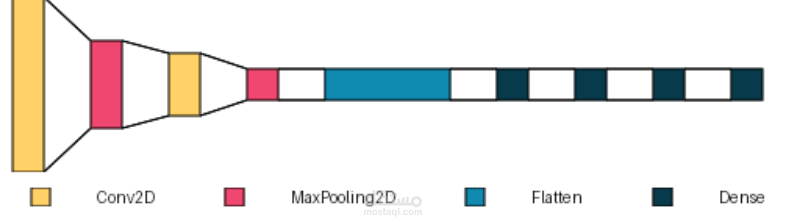

Implementing the LeNet-5 Architecture for High-Accuracy Handwritten Digit Classification

This project implements and trains a Convolutional Neural Network (CNN), specifically a variation of the historical LeNet-5 architecture, for the fundamental computer vision task of handwritten digit recognition. The model uses the widely adopted MNIST dataset, which comprises 60,000 training images and 10,000 test images of the digits $0$ through $9$.

Before training, the data passed through a preprocessing pipeline. The $28 \times 28$ input images were zero-padded to $32 \times 32$ pixels to match the original LeNet-5 input specification, then normalized by scaling pixel values to the $[0, 1]$ range. The class labels were one-hot encoded so that the model could be trained for multi-class classification with the categorical_crossentropy loss function.

The sequential model begins with a convolutional layer (C1) with 16 filters, followed by a MaxPooling2D layer (S1). The pattern repeats with a second convolutional layer of 32 filters (C2) and another pooling layer (S2). After these layers extract hierarchical features, the output is flattened and passed through three fully connected (Dense) layers of $120$, $64$, and $32$ units, all using the hyperbolic tangent (tanh) activation function, a characteristic feature of the classic LeNet model. The network ends in a Dense layer of 10 units with a softmax activation, which yields a probability distribution over the ten digit classes.

The model was compiled with the RMSprop optimizer and trained for 30 epochs with a batch size of 32. This configuration is intended to converge efficiently and reach high classification accuracy. The project as a whole demonstrates both data preparation and deep learning model implementation for a core image classification problem.
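The pipeline described above can be sketched in Keras roughly as follows. This is a minimal illustration, not the project's actual code: the function names `preprocess` and `build_model` are hypothetical, and the $5 \times 5$ kernel size, $2 \times 2$ pooling window, and tanh activation on the convolutional layers are assumptions borrowed from the classic LeNet-5 design, since the description does not state them.

```python
import numpy as np


def preprocess(images, labels, num_classes=10):
    """Pad 28x28 images to 32x32, scale to [0, 1], and one-hot encode labels."""
    # Zero-pad 2 pixels on each side of the height and width axes: 28 -> 32.
    padded = np.pad(images, ((0, 0), (2, 2), (2, 2)), mode="constant")
    # Scale uint8 pixel values from [0, 255] to [0, 1] and add a channel axis.
    x = (padded.astype("float32") / 255.0)[..., np.newaxis]
    # One-hot encode integer labels by indexing rows of an identity matrix.
    y = np.eye(num_classes, dtype="float32")[labels]
    return x, y


def build_model(kernel_size=5, pool_size=2):
    """Build the LeNet-5 variant described in the text.

    kernel_size, pool_size, and the tanh activation on the convolutional
    layers are assumptions; the description does not specify them.
    """
    # Imported here so that preprocess() can be used without TensorFlow.
    from tensorflow import keras
    from tensorflow.keras import layers

    model = keras.Sequential([
        keras.Input(shape=(32, 32, 1)),
        layers.Conv2D(16, kernel_size, activation="tanh"),  # C1
        layers.MaxPooling2D(pool_size),                      # S1
        layers.Conv2D(32, kernel_size, activation="tanh"),  # C2
        layers.MaxPooling2D(pool_size),                      # S2
        layers.Flatten(),
        layers.Dense(120, activation="tanh"),
        layers.Dense(64, activation="tanh"),
        layers.Dense(32, activation="tanh"),
        layers.Dense(10, activation="softmax"),
    ])
    model.compile(
        optimizer="rmsprop",
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

With MNIST loaded through `keras.datasets.mnist.load_data()`, the training arrays would go through `preprocess` and then into `build_model().fit(x_train, y_train, epochs=30, batch_size=32)`, matching the epoch count and batch size given above.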