High-Performance CNN Model Development for CIFAR-10 Color Image Classification

Project Details

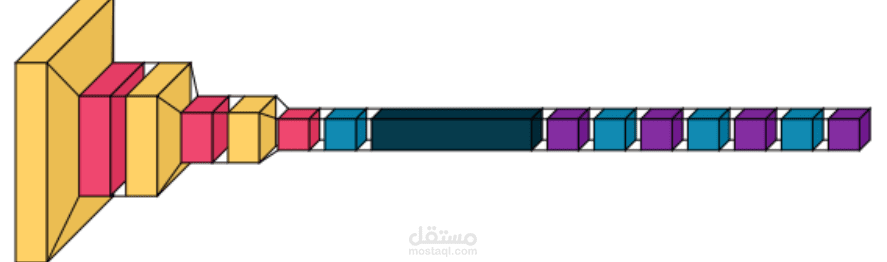

Development and Training of a Convolutional Neural Network for Complex Image Classification using CIFAR-10

This project builds and trains a deep learning model that classifies color images into ten categories (including airplanes, automobiles, cats, and dogs) using the benchmark CIFAR-10 dataset. To handle the visual complexity and high variability of the dataset, it uses a Convolutional Neural Network (CNN) architecture trained with data augmentation.

Data Preparation and Preprocessing

The CIFAR-10 dataset was loaded and split into training and test sets. Each image is $32 \times 32$ pixels with three color channels (RGB). Two preprocessing steps were applied to keep training stable and efficient:

- Normalization: Pixel values in both the training and test data were divided by $255.0$ to scale them into the range $[0, 1]$.
- One-Hot Encoding: The target labels (the ten classes) were converted to one-hot vectors to match the categorical_crossentropy loss function.

Leveraging Data Augmentation

To compensate for the limited amount of training data and mitigate overfitting, an ImageDataGenerator was used to produce modified versions of the training images during each epoch. The applied augmentation techniques included:

- Rotation: Up to $15$ degrees.
- Shifting: Horizontal and vertical shifts of up to $10\%$ of the image width/height.
- Zoom and Shear: Varying the zoom range and shear intensity.
- Horizontal Flip: Mirroring the image horizontally.

Augmentation increases the diversity and effective size of the training dataset, which helps the model generalize to unseen data.

Building the Convolutional Neural Network (CNN) Architecture

A sequential CNN model was constructed, with enough depth to extract complex features from the color images.
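The normalization, one-hot encoding, and horizontal-flip steps described in the data preparation and augmentation sections can be illustrated with a minimal NumPy sketch. It is not the project's code: the project used Keras utilities such as ImageDataGenerator, whereas this sketch swaps in plain array operations to show what those steps do to the data. The helper names `preprocess` and `horizontal_flip` are hypothetical, and a small random batch stands in for the real CIFAR-10 images.

```python
import numpy as np

NUM_CLASSES = 10  # CIFAR-10 has ten categories


def preprocess(images, labels, num_classes=NUM_CLASSES):
    """Scale uint8 pixels to [0, 1] and one-hot encode integer labels."""
    x = images.astype("float32") / 255.0
    # Row i of the identity matrix is the one-hot vector for class i.
    y = np.eye(num_classes, dtype="float32")[labels.reshape(-1)]
    return x, y


def horizontal_flip(batch):
    """Mirror a batch shaped (N, height, width, channels) left to right."""
    return batch[:, :, ::-1, :]


# Synthetic stand-in for a CIFAR-10 batch (assumption: the real data
# would be loaded from the dataset instead of generated randomly).
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)
labels = np.array([[3], [5], [0], [9]])  # integer class ids, shape (N, 1)

x, y = preprocess(images, labels)
flipped = horizontal_flip(x)
```

After this runs, `x` holds float values in $[0, 1]$ with the original image shape, `y` has one row of ten entries per image, and `flipped` is a mirrored copy of `x`. Rotation, shifting, zoom, and shear require interpolation and are left to the augmentation library.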
The architecture consists of three main convolutional blocks, each followed by a MaxPooling2D layer for dimensionality reduction. The ReLU activation function is used throughout the convolutional layers. The components are:

- Convolutional Blocks: Three consecutive convolutional layers using $2 \times 2$ filters (with padding='same').
- Dropout Layers: Dropout layers (with rates of $0.3$ and $0.2$) were inserted after each convolutional phase and within the fully connected layers to reduce co-adaptation of neurons and limit overfitting.
- Fully Connected Layers (Dense): After flattening the feature maps, the output passes through three sequential Dense layers ($128$, $64$, and $32$ units), all using the ReLU activation function.
- Output Layer: The final layer has 10 units and uses the softmax activation function to produce a probability for each class.

Training and Deployment

The model was compiled with the Adam optimizer and the categorical_crossentropy loss function, with accuracy as the tracked metric. It was trained on the augmented data for 5 epochs, with the number of steps per epoch calculated dynamically. The trained model weights were saved (model.weight.best.hdf5) for later use in prediction tasks. The project concludes with a deployment module that loads the trained model and processes new images for inference, completing an end-to-end classification pipeline.
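To make the architecture and the steps-per-epoch calculation more concrete, the sketch below walks the feature-map sizes and parameter counts through the layers described above, using only arithmetic. Several values are assumptions, not details from the project: one convolutional layer per block, filter counts of 32, 64, and 128, a pooling size of 2, and a batch size of 32. The steps-per-epoch formula shown is one common choice and may differ from the one the project used.

```python
import math


def pooled_size(size, pool=2):
    """Spatial size after max pooling with the given pool size and equal stride."""
    return size // pool


def conv_params(kernel, in_channels, filters):
    """Weights plus biases of a convolutional layer with a square kernel."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(inputs, units):
    """Weights plus biases of a fully connected layer."""
    return inputs * units + units


FILTERS = [32, 64, 128]          # hypothetical: the text does not state filter counts
DENSE_UNITS = [128, 64, 32, 10]  # from the text: three hidden layers, then softmax output

size, channels, total = 32, 3, 0  # CIFAR-10 input: 32 x 32 pixels, RGB
for filters in FILTERS:
    # 2x2 kernel; padding='same' leaves the spatial size unchanged.
    total += conv_params(2, channels, filters)
    # Each block ends with max pooling (pool size 2 is assumed).
    size = pooled_size(size)
    channels = filters

# Flatten turns the final feature maps into one vector.
# Dropout and Flatten layers add no trainable parameters.
flat = size * size * channels
inputs = flat
for units in DENSE_UNITS:
    total += dense_params(inputs, units)
    inputs = units

TRAIN_IMAGES = 50_000  # size of the CIFAR-10 training split
BATCH_SIZE = 32        # assumption: the text does not state the batch size
steps_per_epoch = math.ceil(TRAIN_IMAGES / BATCH_SIZE)

print(f"final feature map: {size}x{size}x{channels}, flattened: {flat}")
print(f"trainable parameters: {total}, steps per epoch: {steps_per_epoch}")
```

Under these assumptions, most of the parameters sit in the first Dense layer, which is one reason dropout inside the fully connected layers is a reasonable place to regularize.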