Comparative Analysis of PCA and ICA for Feature Selection and Classification in Leukemia and Lung Datasets

Project Details

Objective:

The report evaluates the performance of two dimensionality reduction techniques, PCA (Principal Component Analysis) and ICA (Independent Component Analysis), across various feature selection methods and machine learning models for two datasets: Leukemia and Lung.

Key Findings:

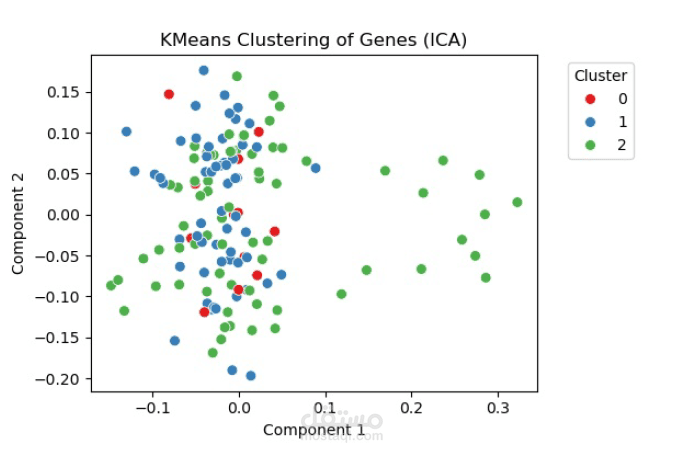

1. PCA vs ICA:

PCA consistently outperformed ICA in terms of cross-validation (CV) mean scores and test accuracy across most models.

ICA showed inconsistent and often degraded performance, especially for ensemble methods (e.g., Random Forest, Bagging) and tree-based classifiers.
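A comparison of this kind can be sketched with scikit-learn pipelines. The example below is only an illustration and is not the code behind the report: it uses synthetic data from `make_classification` in place of the Leukemia and Lung datasets, and the values `k=50`, `n_components=10`, the linear SVM, and the 70/30 split are all assumptions chosen for the sketch.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA, FastICA
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for a high-dimensional dataset (assumption, not the real data).
X, y = make_classification(
    n_samples=150, n_features=500, n_informative=20, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)


def evaluate(reducer):
    """Return (CV mean accuracy, test accuracy) for one dimensionality reducer."""
    pipe = Pipeline([
        ("scale", StandardScaler()),
        ("select", SelectKBest(f_classif, k=50)),  # ANOVA F-test filter
        ("reduce", reducer),                       # PCA or ICA
        ("clf", SVC(kernel="linear")),
    ])
    # Selection and reduction are refit inside every fold, so no test data leaks in.
    cv_mean = cross_val_score(pipe, X_train, y_train, cv=5).mean()
    test_acc = pipe.fit(X_train, y_train).score(X_test, y_test)
    return cv_mean, test_acc


results = {
    "PCA": evaluate(PCA(n_components=10, random_state=0)),
    "ICA": evaluate(
        FastICA(n_components=10, whiten="unit-variance",
                max_iter=1000, random_state=0)
    ),
}

for name, (cv_mean, test_acc) in results.items():
    print(f"{name}: CV mean = {cv_mean:.4f}, test accuracy = {test_acc:.4f}")
```

Keeping the feature selector and the reducer inside the pipeline means both are fitted on training folds only, which keeps the CV mean and the test accuracy comparable between PCA and ICA.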

2. Leukemia Dataset:

Best Models with PCA:

SVM and Bagging + SVM achieved the highest test accuracy (0.9672) across all feature selection methods.

Bagging + ANN also performed well, benefiting significantly from PCA.
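The "Bagging + SVM" and "Bagging + ANN" configurations can be approximated with scikit-learn's `BaggingClassifier` wrapped around a base model. This is a sketch under stated assumptions: the data is synthetic, `MLPClassifier` stands in for the ANN, and the hyperparameters (`n_estimators=10`, `n_components=10`, one hidden layer of 32 units) are illustrative rather than taken from the report.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in data (assumption, not the Leukemia dataset).
X, y = make_classification(
    n_samples=150, n_features=200, n_informative=15, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# Base learners to be bagged; MLPClassifier plays the role of the ANN here.
base_models = {
    "SVM": SVC(kernel="linear"),
    "ANN": MLPClassifier(hidden_layer_sizes=(32,), max_iter=1000, random_state=0),
}

bagging_scores = {}
for name, base in base_models.items():
    model = make_pipeline(
        StandardScaler(),
        PCA(n_components=10, random_state=0),
        # The base estimator is passed positionally so the call does not depend
        # on the keyword name, which differs between scikit-learn releases.
        BaggingClassifier(base, n_estimators=10, random_state=0),
    )
    model.fit(X_train, y_train)
    bagging_scores[f"Bagging + {name}"] = model.score(X_test, y_test)

for label, acc in bagging_scores.items():
    print(f"{label} with PCA: test accuracy = {acc:.4f}")
```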

Best Models with ICA:

ANN achieved the highest test accuracy (0.9545) with ANOVA + ICA, but its CV scores were only modest. The gap between the two suggests the test result may not generalize, whether through overfitting or a favorable test split.

Tree-based models (Decision Tree, Random Forest) performed poorly with ICA, showing low test accuracy and poor generalization.
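One way to check whether a high test accuracy is supported by cross-validation, as questioned for ANN with ANOVA + ICA, is to compare the two scores directly. The sketch below assumes synthetic data, `MLPClassifier` as the ANN, and illustrative settings (`k=40`, `n_components=10`, 5-fold CV); none of these come from the report.

```python
from sklearn.datasets import make_classification
from sklearn.decomposition import FastICA
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data (assumption).
X, y = make_classification(
    n_samples=150, n_features=300, n_informative=15, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

# ANOVA filter -> ICA -> ANN, mirroring the "ANOVA + ICA" configuration.
pipe = make_pipeline(
    StandardScaler(),
    SelectKBest(f_classif, k=40),
    FastICA(n_components=10, whiten="unit-variance", max_iter=1000, random_state=0),
    MLPClassifier(hidden_layer_sizes=(32,), max_iter=1000, random_state=0),
)

cv_scores = cross_val_score(pipe, X_train, y_train, cv=5)
test_acc = pipe.fit(X_train, y_train).score(X_test, y_test)

# A test accuracy well above the CV mean, combined with a large CV spread,
# is a hint that the single test split may be flattering the model.
gap = test_acc - cv_scores.mean()
print(f"CV mean = {cv_scores.mean():.4f} (std {cv_scores.std():.4f})")
print(f"Test accuracy = {test_acc:.4f}, gap = {gap:+.4f}")
```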

3. Lung Dataset:

Best Models with PCA:

SVM and Bagging + SVM consistently achieved the highest test accuracy (0.9672) across feature selection methods.

Bagging + ANN showed strong performance, especially with Backward Elimination + PCA.

Best Models with ICA:

ANN achieved the highest test accuracy (0.9508) with ANOVA + ICA, but other models struggled with ICA-based feature selection.

Decision Trees and Random Forests showed significant performance drops with ICA.

4. Feature Selection Techniques:

ANOVA + PCA: Delivered the best overall performance for most models, especially SVM and Bagging + SVM.

Mutual Information (MI) + PCA: Performance was consistent with ANOVA + PCA, with SVM and Bagging + SVM leading.

Forward Selection + PCA: Improved the performance of ensemble models such as Bagging + SVM and Bagging + ANN.

Backward Elimination + PCA: Provided the most consistent high test accuracy across models, particularly for ensemble approaches.
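The four selection strategies listed above can each be placed in front of PCA in a single pipeline. The following is a minimal sketch, assuming a small synthetic dataset so the wrapper methods run quickly, with `SequentialFeatureSelector` standing in for forward selection and backward elimination; the feature counts (`K = 8`, `n_components=4`) and the linear SVM are illustrative choices, not the report's settings.

```python
from functools import partial

from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import (
    SelectKBest,
    SequentialFeatureSelector,
    f_classif,
    mutual_info_classif,
)
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Small synthetic dataset (assumption) so the wrapper methods stay fast.
X, y = make_classification(
    n_samples=120, n_features=20, n_informative=6, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, stratify=y, random_state=0
)

K = 8  # number of features kept before PCA (illustrative)
wrapper_estimator = SVC(kernel="linear")  # model used to score candidate subsets

selectors = {
    "ANOVA": SelectKBest(f_classif, k=K),
    "Mutual Information": SelectKBest(
        partial(mutual_info_classif, random_state=0), k=K
    ),
    "Forward Selection": SequentialFeatureSelector(
        wrapper_estimator, n_features_to_select=K, direction="forward", cv=3
    ),
    "Backward Elimination": SequentialFeatureSelector(
        wrapper_estimator, n_features_to_select=K, direction="backward", cv=3
    ),
}

selection_scores = {}
for name, selector in selectors.items():
    pipe = make_pipeline(
        StandardScaler(),
        selector,                             # pick K features
        PCA(n_components=4, random_state=0),  # then compress them
        SVC(kernel="linear"),
    )
    pipe.fit(X_train, y_train)
    selection_scores[f"{name} + PCA"] = pipe.score(X_test, y_test)

for label, acc in selection_scores.items():
    print(f"{label}: test accuracy = {acc:.4f}")
```

On real gene-expression data with thousands of features, the wrapper methods would be far more expensive than the two filter methods, so a filter step is often applied first to shrink the candidate set.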

Conclusion:

In these experiments, PCA was the stronger dimensionality reduction technique, giving higher CV mean scores and test accuracy across most models on both datasets.

ICA was less reliable: it often degraded performance, most noticeably for tree-based and ensemble models, although it may still have niche applications.

SVM and Bagging + SVM consistently performed best across both datasets and feature selection methods, particularly with PCA.

Final Takeaway:

For classification tasks on high-dimensional datasets like the two evaluated here, PCA paired with SVM or Bagging + SVM was the most effective approach. ICA is less suitable for these tasks but may be useful in scenarios that specifically require independent component extraction.