YOLO Object Detection

Work Details

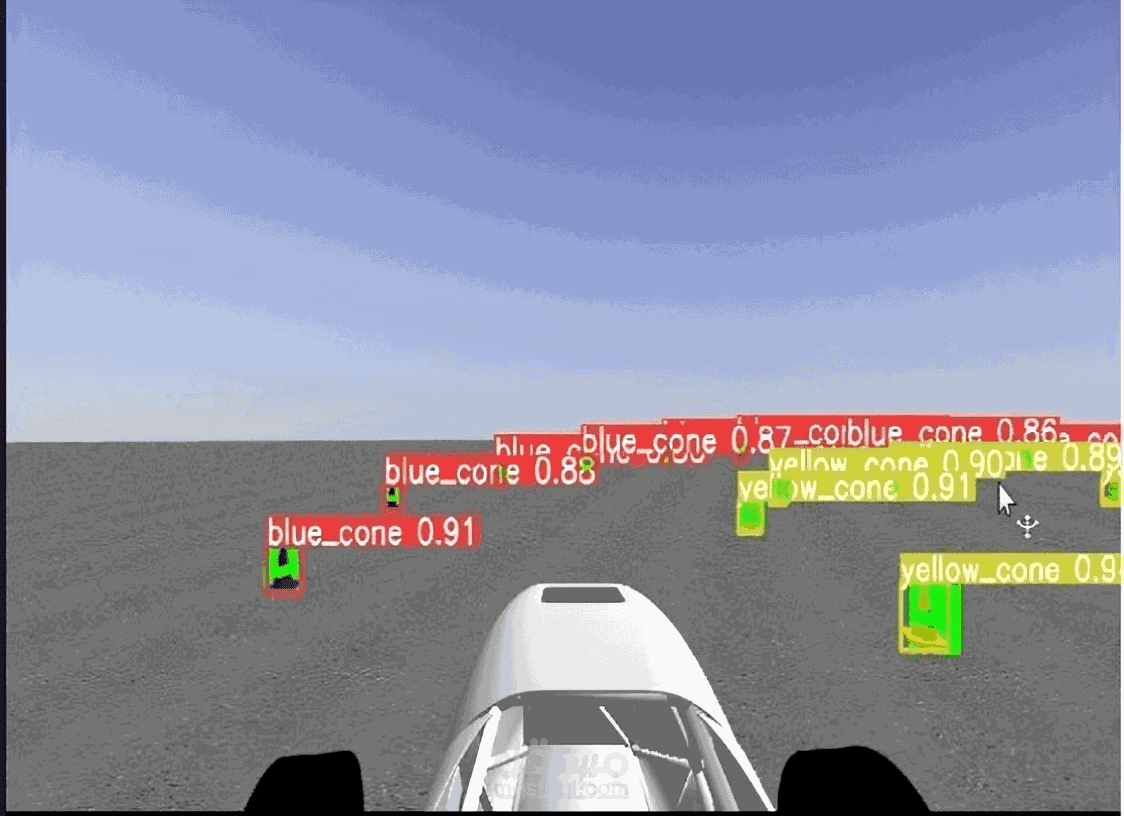

In the Formula Student autonomous project, I implemented YOLO (You Only Look Once) object detection to identify track cones in real time.

Objective: Enable the car to detect and classify traffic cones (usually yellow, blue, and orange) that define the race track.

Method:

Collected and labeled a custom dataset of cone images under varying conditions (lighting, angles, backgrounds).

Trained a YOLOv8s model (the small variant of YOLOv8) for real-time cone detection with high accuracy.

Optimized inference for low latency, so the model runs at real-time speeds on the car's embedded hardware.
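To illustrate the labelling step, the sketch below converts a pixel-space bounding box into one line of the normalised text format used for YOLO training data (e.g. Ultralytics YOLOv8): `class_id x_center y_center width height`, with every coordinate divided by the image width or height. The helper name `to_yolo_label` and the class IDs (yellow = 0, blue = 1, orange = 2) are hypothetical; the project's actual labelling tool and class order are not specified.

```python
# Hypothetical class mapping: the cone colours come from the project description,
# but the integer IDs are an assumption for illustration.
CONE_CLASSES = {"yellow": 0, "blue": 1, "orange": 2}


def to_yolo_label(cls_name, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into one YOLO label line.

    Each line of a YOLO label file describes one object as
    "<class_id> <x_center> <y_center> <width> <height>", with all
    coordinates normalised to [0, 1] by the image width and height.
    """
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError(f"box {box} lies outside a {img_w}x{img_h} image")
    x_c = (x_min + x_max) / 2 / img_w  # normalised box centre (x)
    y_c = (y_min + y_max) / 2 / img_h  # normalised box centre (y)
    w = (x_max - x_min) / img_w        # normalised box width
    h = (y_max - y_min) / img_h        # normalised box height
    return f"{CONE_CLASSES[cls_name]} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


if __name__ == "__main__":
    # A blue cone occupying pixels (600, 400)-(640, 480) in a 1280x720 frame.
    print(to_yolo_label("blue", (600, 400, 640, 480), 1280, 720))
    # -> 1 0.484375 0.611111 0.031250 0.111111
```

Normalising by image size keeps the labels valid if the images are later resized for training.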

Impact: The cone detection system became a critical component of the car’s perception pipeline, feeding cone positions into the localization and path-planning algorithms. This allowed the car to identify the track boundaries and drive autonomously.
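A minimal sketch of how raw detections might be packaged for the downstream modules, assuming each detection arrives as `(class_id, confidence, x_min, y_min, x_max, y_max)` in pixels. The names `detections_to_cones` and `ConeObservation`, the 0.5 confidence threshold, and the class IDs are all hypothetical. The sketch keeps the bottom-centre of each box as the cone's approximate ground-contact point; turning that pixel into track coordinates would additionally require camera calibration, which is not shown.

```python
from dataclasses import dataclass

# Hypothetical class IDs (assumed, not taken from the project).
CLASS_NAMES = {0: "yellow", 1: "blue", 2: "orange"}


@dataclass
class ConeObservation:
    colour: str
    confidence: float
    u: float  # pixel column of the cone's base (bottom-centre of the box)
    v: float  # pixel row of the cone's base


def detections_to_cones(detections, min_conf=0.5):
    """Group detections by cone colour, dropping low-confidence boxes.

    Each detection is assumed to be (class_id, confidence, x_min, y_min, x_max, y_max).
    The bottom-centre of a box approximates where the cone touches the ground.
    """
    cones = {name: [] for name in CLASS_NAMES.values()}
    for class_id, conf, x_min, y_min, x_max, y_max in detections:
        if conf < min_conf or class_id not in CLASS_NAMES:
            continue  # skip low-confidence or unknown-class boxes
        colour = CLASS_NAMES[class_id]
        cones[colour].append(ConeObservation(colour, conf, (x_min + x_max) / 2, y_max))
    # Sort nearest-first: for a forward-facing camera over flat ground,
    # a larger image row usually means a closer cone.
    for group in cones.values():
        group.sort(key=lambda c: c.v, reverse=True)
    return cones


if __name__ == "__main__":
    dets = [
        (1, 0.91, 600, 400, 640, 480),  # blue, near
        (0, 0.88, 900, 380, 930, 430),  # yellow
        (1, 0.30, 700, 350, 715, 375),  # blue, below threshold, dropped
        (1, 0.75, 560, 360, 580, 390),  # blue, farther away
    ]
    for colour, group in detections_to_cones(dets).items():
        print(colour, [(c.u, c.v) for c in group])
```

Grouping by colour preserves boundary information: in Formula Student driverless events, blue and yellow cones mark the left and right track edges, so a planner can treat each group as one boundary.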