Smart Glasses Assistive System for the Visually Impaired

Project Details

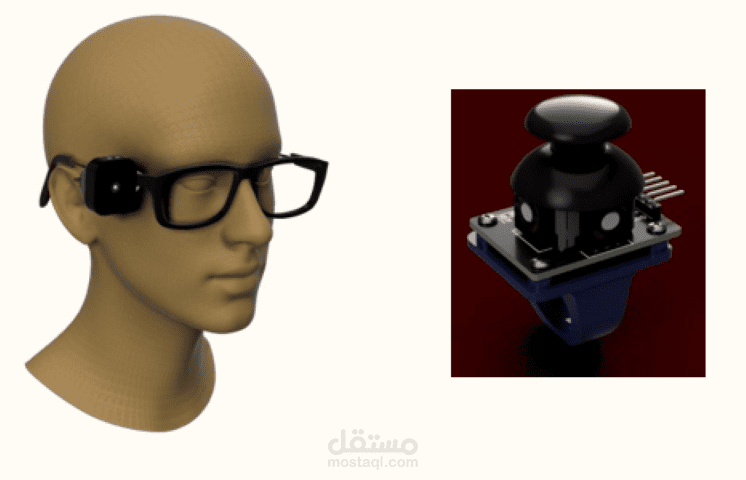

Developed a multi-functional AI-powered wearable system using Raspberry Pi 4 and computer vision, designed to assist blind and visually impaired individuals in daily life.

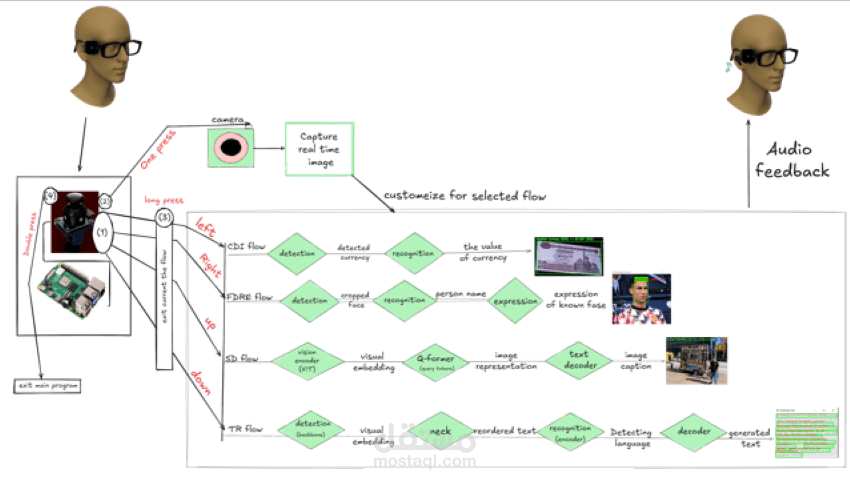

Challenges Addressed: Navigating unfamiliar environments, identifying currency, recognizing faces, interpreting emotions, and reading English text in real time.


Features & Solutions:

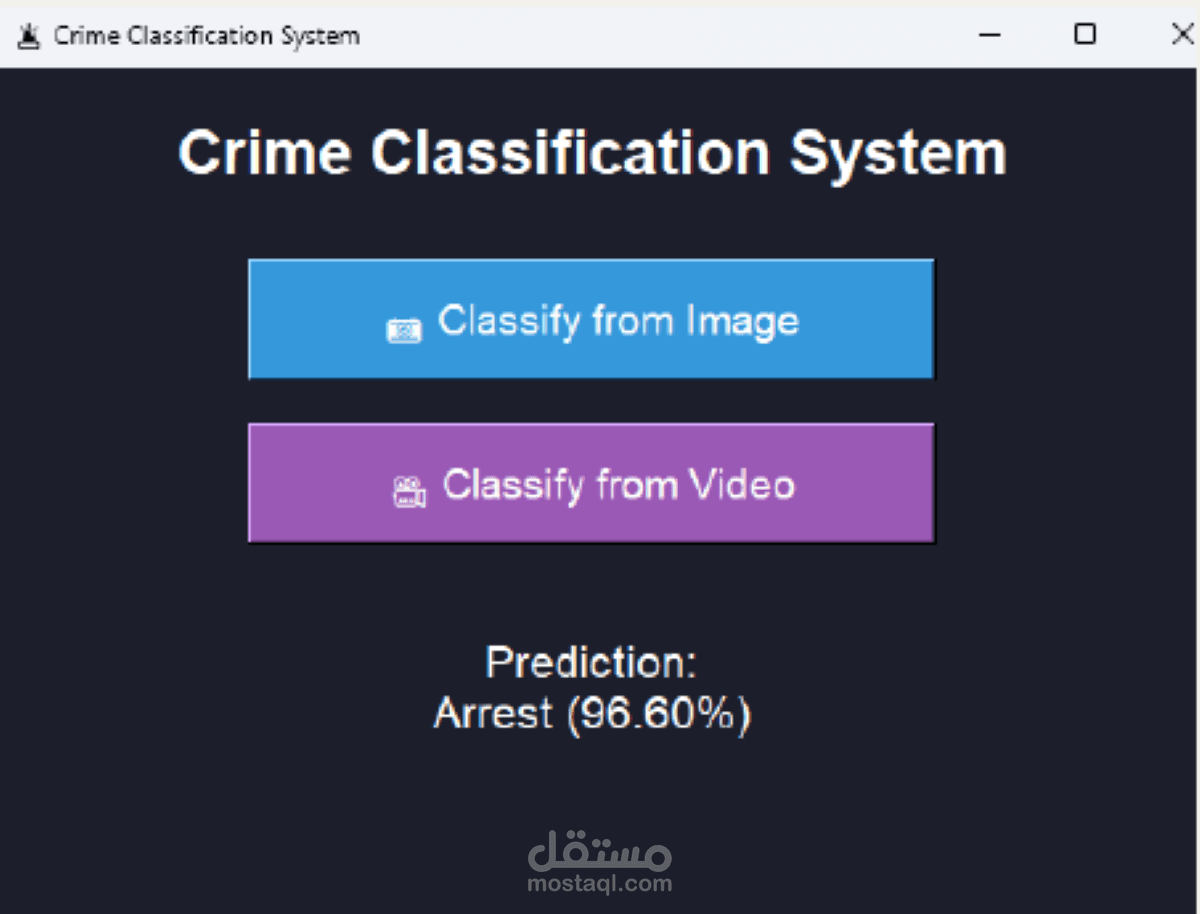

Currency Recognition: YOLOv8 and CNN models identify Egyptian banknotes.

Face Detection & Emotion Analysis: MobileNet and MediaPipe analyze faces in real time to provide social context.

Scene Description & Text Reading: BLIP Transformer APIs generate spoken descriptions of surroundings and read English text aloud.

User Interaction: Joystick-based navigation for easy control, with audio feedback guiding the user.
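The joystick-driven interaction can be pictured as a small menu that cycles through the features above and speaks each choice. The following is a minimal sketch under stated assumptions, not the project's actual code: the `ModeMenu` class, the event names (`"left"`, `"right"`, `"press"`), and the `speak` callback are hypothetical stand-ins for the real joystick driver and audio output.

```python
from typing import Callable, List, Optional

# Feature names taken from the list above; the ordering is an assumption.
MODES = [
    "Currency Recognition",
    "Face and Emotion Analysis",
    "Scene Description",
    "Text Reading",
]


class ModeMenu:
    """Hypothetical joystick menu: left/right cycles modes, press selects one."""

    def __init__(self, modes: List[str], speak: Callable[[str], None]) -> None:
        if not modes:
            raise ValueError("at least one mode is required")
        self.modes = list(modes)
        self.speak = speak  # assumed audio-feedback hook, e.g. a text-to-speech call
        self.index = 0

    def handle(self, event: str) -> Optional[str]:
        """Process one joystick event; return the chosen mode on 'press'."""
        if event == "right":
            self.index = (self.index + 1) % len(self.modes)  # wrap past the end
            self.speak(self.modes[self.index])
        elif event == "left":
            self.index = (self.index - 1) % len(self.modes)  # wrap before the start
            self.speak(self.modes[self.index])
        elif event == "press":
            selected = self.modes[self.index]
            self.speak(f"Starting {selected}")
            return selected
        return None  # navigation and unknown events select nothing


if __name__ == "__main__":
    # print stands in for the audio system in this demo.
    menu = ModeMenu(MODES, speak=print)
    for event in ["right", "right", "press"]:
        menu.handle(event)
```

Keeping the audio output behind a callback means the menu logic can be exercised on a desktop without the Raspberry Pi hardware attached.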

Technologies: Python, OpenCV, pretrained models, TFLite, custom APIs, Raspberry Pi 4, audio systems.
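For the currency recognition feature, one way to turn detector output into audio feedback is to filter detections by confidence and build a short sentence for the speech system. This is an illustrative sketch only: the label names in `DENOMINATIONS`, the `(label, confidence)` input format, and the 0.6 threshold are assumptions, not the project's real class names or settings.

```python
from typing import Iterable, Tuple

# Hypothetical mapping from detector class labels to values in Egyptian pounds.
DENOMINATIONS = {
    "5_EGP": 5,
    "10_EGP": 10,
    "20_EGP": 20,
    "50_EGP": 50,
    "100_EGP": 100,
    "200_EGP": 200,
}


def announce_banknotes(
    detections: Iterable[Tuple[str, float]],
    min_confidence: float = 0.6,  # assumed threshold, tune on real data
) -> str:
    """Build a spoken-style summary from (label, confidence) detections."""
    # Keep only known labels whose confidence meets the threshold.
    values = [
        DENOMINATIONS[label]
        for label, confidence in detections
        if confidence >= min_confidence and label in DENOMINATIONS
    ]
    if not values:
        return "No banknote recognized"
    if len(values) == 1:
        return f"{values[0]} Egyptian pounds"
    listed = ", ".join(str(v) for v in sorted(values))
    return f"{len(values)} banknotes: {listed}. Total {sum(values)} Egyptian pounds"


if __name__ == "__main__":
    # The low-confidence 20_EGP detection is dropped by the threshold.
    sample = [("50_EGP", 0.91), ("100_EGP", 0.85), ("20_EGP", 0.30)]
    print(announce_banknotes(sample))
```

The returned string would then be passed to whatever text-to-speech component the device uses.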