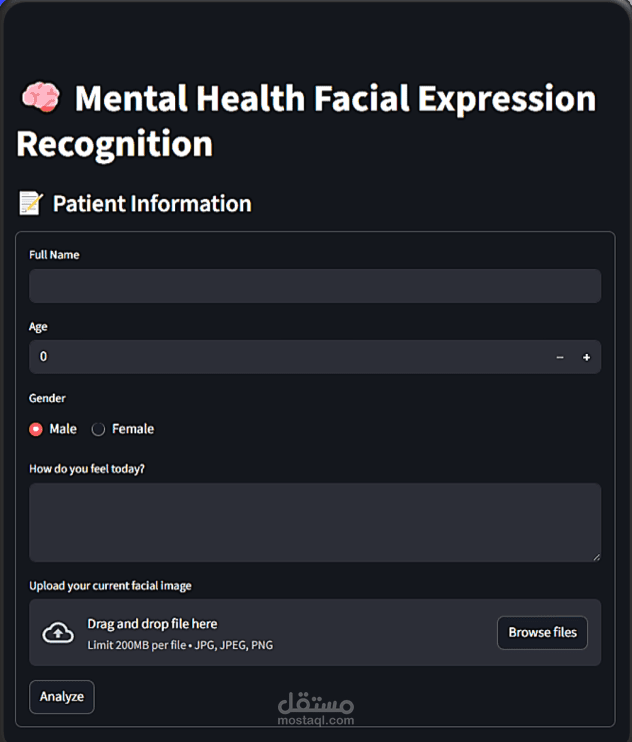

Mental Health Facial Expression Recognition

Project Details

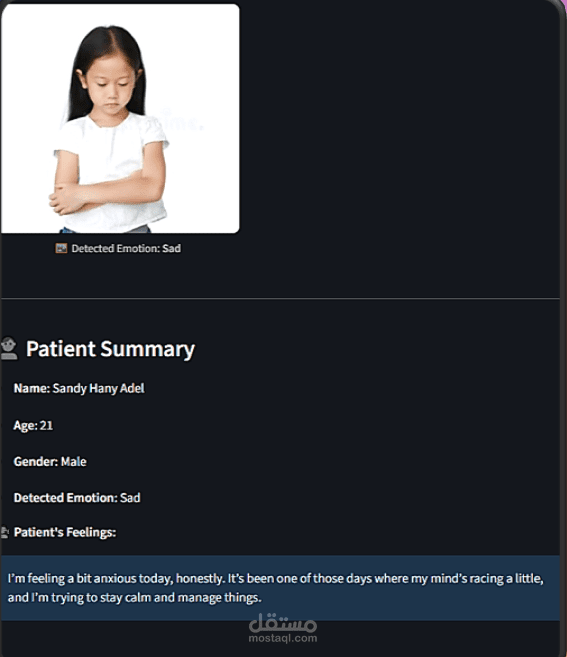

I developed a facial expression recognition system that uses convolutional neural networks (CNNs) to help mental health clinics assess patients' emotional states. Through a user-friendly GUI, a patient uploads a photo of their face and the system automatically detects their current emotion. The patient also enters personal details (name, age, gender) and can describe their feelings freely in a large text field. The system then generates a structured Patient Report that combines the AI-detected emotion with these self-reported inputs, giving clinicians a fuller picture of the patient's state.

The tool is intended to support the early detection of depression, anxiety, and emotional distress, to flag patients who show prolonged sadness or fear, and to help children, the elderly, and trauma survivors who may struggle to express their emotions verbally.

Built with TensorFlow, Keras, and OpenCV, the project demonstrates how AI-powered healthcare applications can bridge the gap between patients and clinicians, enabling early intervention, clearer communication, and better mental health care.
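The emotion-detection step can be sketched as a small Keras CNN. This is a minimal sketch, not the project's actual architecture: the 48x48 grayscale input, the seven emotion labels (those of the public FER-2013 dataset), the layer sizes, and the names `build_emotion_cnn` and `predict_emotion` are all assumptions. It also assumes the face has already been located and cropped (for example with one of OpenCV's Haar cascade detectors) before being passed in.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Assumption: FER-2013-style label set and ordering; the project's labels may differ.
EMOTIONS = ["angry", "disgust", "fear", "happy", "sad", "surprise", "neutral"]


def build_emotion_cnn(input_shape=(48, 48, 1), num_classes=len(EMOTIONS)):
    """Hypothetical small CNN: three conv/pool stages followed by a dense classifier."""
    model = keras.Sequential([
        keras.Input(shape=input_shape),
        layers.Conv2D(32, 3, padding="same", activation="relu"),
        layers.MaxPooling2D(),  # 48x48 -> 24x24
        layers.Conv2D(64, 3, padding="same", activation="relu"),
        layers.MaxPooling2D(),  # 24x24 -> 12x12
        layers.Conv2D(128, 3, padding="same", activation="relu"),
        layers.MaxPooling2D(),  # 12x12 -> 6x6
        layers.Flatten(),
        layers.Dropout(0.5),
        layers.Dense(128, activation="relu"),
        layers.Dense(num_classes, activation="softmax"),  # one probability per emotion
    ])
    model.compile(optimizer="adam",
                  loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model


def predict_emotion(model, face_gray):
    """face_gray: 2-D uint8 array, already cropped to the face and resized to 48x48."""
    x = face_gray.astype("float32") / 255.0   # scale pixels to [0, 1]
    x = x[np.newaxis, ..., np.newaxis]         # add batch and channel axes -> (1, 48, 48, 1)
    probs = model.predict(x, verbose=0)[0]
    best = int(np.argmax(probs))
    return EMOTIONS[best], float(probs[best])
```

An untrained model returns arbitrary predictions; it would need to be fitted on labeled face images (for example with `model.fit`) before its output is meaningful.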
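The report and flagging logic can be illustrated with plain Python. The fields mirror the inputs described in the project summary (name, age, gender, detected emotion, free-text feelings), but the `PatientReport` class, the `flag_prolonged` helper, the set of concerning emotions, and the rule that "prolonged" means three consecutive sessions are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical: emotions treated as concerning when they persist across sessions.
CONCERNING = {"sad", "fear"}


@dataclass
class PatientReport:
    name: str
    age: int
    gender: str
    detected_emotion: str   # label produced by the emotion classifier
    confidence: float       # classifier probability in [0, 1]
    self_report: str        # patient's free-text description of their feelings
    session_date: date = field(default_factory=date.today)

    def to_text(self) -> str:
        """Render a plain-text report combining AI-detected and self-reported data."""
        return (
            f"Patient: {self.name} (age {self.age}, {self.gender})\n"
            f"Date: {self.session_date.isoformat()}\n"
            f"Detected emotion: {self.detected_emotion} "
            f"({self.confidence:.0%} confidence)\n"
            f"Self-report: {self.self_report}"
        )


def flag_prolonged(history, min_sessions=3):
    """Return True if each of the last `min_sessions` reports shows a concerning emotion."""
    recent = history[-min_sessions:]
    return (len(recent) == min_sessions
            and all(r.detected_emotion in CONCERNING for r in recent))
```

Keeping the self-report as free text, rather than forcing it into categories, preserves what the patient actually wrote for the clinician to read alongside the detected emotion.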