AI-Powered Audio Chatbot API for Language Learning with Pronunciation Feedback Using Google Gemini

Work Details

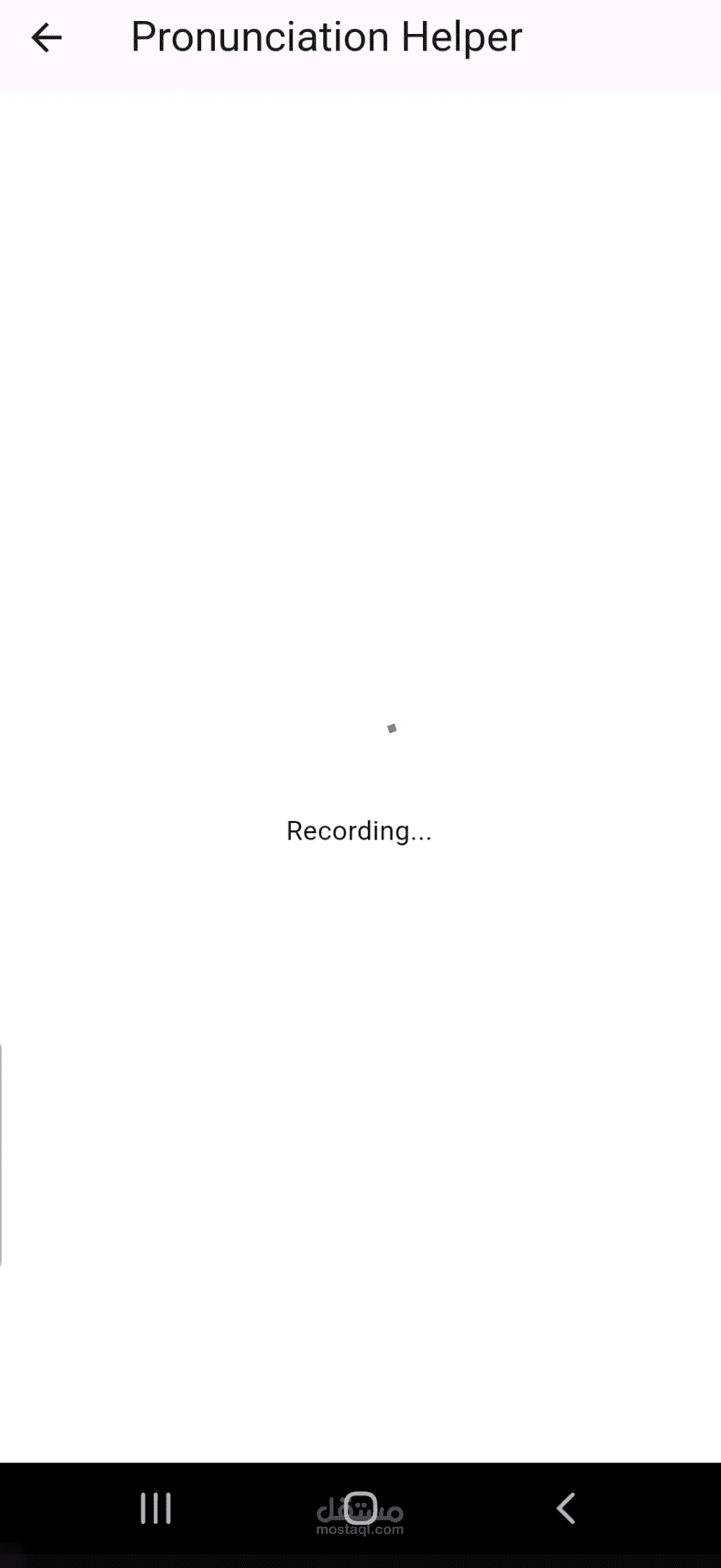

This project provides a backend audio chatbot API designed for integration into a language learning application. The system allows users to have spoken conversations with an AI-powered assistant that not only responds in real time but also offers feedback on pronunciation and speaking quality.

Key components and capabilities:

Audio Upload & Processing

Users send recorded audio files (speech samples) via a simple HTTP POST request.
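Here is a minimal client-side sketch of that upload using only the Python standard library. The source does not say how the file is encoded in the request. This sketch assumes `multipart/form-data` with a hypothetical form field named `audio`, and the endpoint URL is a placeholder.

```python
import urllib.request
import uuid
from pathlib import Path


def build_multipart(field_name: str, filename: str, data: bytes,
                    content_type: str = "application/octet-stream") -> tuple[bytes, str]:
    """Encode one file as a multipart/form-data body.

    Returns the body bytes and the matching Content-Type header value.
    """
    boundary = uuid.uuid4().hex  # random boundary unlikely to occur in the audio bytes
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field_name}"; filename="{filename}"\r\n'
        f"Content-Type: {content_type}\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def send_recording(url: str, path: str) -> bytes:
    """POST a recorded clip and return the raw response body (expected: WAV audio)."""
    p = Path(path)
    # "audio" is a hypothetical field name; match whatever the server reads.
    body, header = build_multipart("audio", p.name, p.read_bytes())
    req = urllib.request.Request(url, data=body, method="POST",
                                 headers={"Content-Type": header})
    with urllib.request.urlopen(req) as resp:
        return resp.read()
```

For example, `send_recording("http://192.168.1.10:5000/chat", "sample.m4a")` would return the reply bytes. The address, port, and `/chat` path are all hypothetical.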

Incoming audio is converted with ffmpeg to 16-bit PCM WAV at 16 kHz for compatibility with Google Gemini.
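The conversion can be expressed as a single ffmpeg invocation. The sketch below builds that command in Python and runs it with `subprocess`. The `-ac 1` (mono) flag is an assumption that the source does not state, and the helper names are hypothetical.

```python
import subprocess


def ffmpeg_to_pcm_wav_cmd(src: str, dst: str, rate: int = 16000) -> list[str]:
    """Build an ffmpeg command that re-encodes any input to 16-bit PCM WAV."""
    return [
        "ffmpeg",
        "-y",                    # overwrite dst if it already exists
        "-i", src,               # input in whatever format the client uploaded
        "-ar", str(rate),        # resample to 16 kHz
        "-ac", "1",              # downmix to mono (assumption, not stated in the source)
        "-acodec", "pcm_s16le",  # 16-bit signed little-endian PCM
        dst,
    ]


def convert_to_pcm_wav(src: str, dst: str) -> None:
    # check=True raises CalledProcessError if ffmpeg exits with a non-zero status
    subprocess.run(ffmpeg_to_pcm_wav_cmd(src, dst), check=True, capture_output=True)
```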

Integration with Google Gemini 2.5 Audio Dialog Model

A live session is established with the Gemini model to enable real-time, two-way spoken interaction.

The user's audio is streamed into Gemini, and the model responds with synthesized audio output.
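Before streaming, the server needs the raw PCM samples rather than the WAV file as a whole. The sketch below assumes the 16-bit, 16 kHz WAV from the conversion step and an arbitrary 100 ms chunk size. It reads the file with Python's `wave` module and yields fixed-size chunks. The call that actually sends each chunk into the Gemini live session depends on the SDK version, so it is deliberately left out.

```python
import io
import wave
from typing import Iterator


def pcm_chunks(wav_bytes: bytes, chunk_ms: int = 100) -> Iterator[bytes]:
    """Yield raw PCM chunks of roughly chunk_ms each from a 16-bit, 16 kHz WAV."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wf:
        # Reject anything that did not go through the 16-bit / 16 kHz conversion.
        if wf.getsampwidth() != 2 or wf.getframerate() != 16000:
            raise ValueError("expected 16-bit PCM at 16 kHz")
        frames_per_chunk = wf.getframerate() * chunk_ms // 1000
        while True:
            chunk = wf.readframes(frames_per_chunk)
            if not chunk:  # end of the audio data
                break
            yield chunk
```

Sending small chunks rather than one large buffer lets the model start processing before the whole clip has been transmitted.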

Language Learning Feedback

The system is configured to act as a helpful pronunciation coach, providing guidance on:

Pronunciation clarity

Accent issues

Word stress and rhythm

Fluency improvement tips

It responds with audio so users can hear both corrections and model pronunciations.
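One way to express this coaching role is a system instruction passed when the live session is opened. The prompt wording and the helper names below are assumptions. The config key names are modeled on the `google-genai` SDK's live-session config as we understand it and should be checked against the SDK version in use.

```python
FOCUS_AREAS = (
    "pronunciation clarity",
    "accent issues",
    "word stress and rhythm",
    "fluency improvement tips",
)


def build_coach_instruction(target_language: str,
                            focus_areas: tuple[str, ...] = FOCUS_AREAS) -> str:
    """Compose a system prompt that casts the model as a pronunciation coach."""
    bullets = "\n".join(f"- {area}" for area in focus_areas)
    return (
        f"You are a friendly {target_language} pronunciation coach. "
        "After the learner speaks, reply briefly, then give feedback on:\n"
        f"{bullets}\n"
        "Say the corrected word or phrase slowly so the learner can repeat it."
    )


def build_live_config(target_language: str) -> dict:
    # Assumed key names for the live-session config; verify against your SDK version.
    return {
        "response_modalities": ["AUDIO"],  # ask for spoken replies, not text
        "system_instruction": build_coach_instruction(target_language),
    }
```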

Audio Response Playback

Gemini's raw audio response is packaged as a .wav file and returned to the client for playback.
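This sketch assumes Gemini returns raw 16-bit mono PCM at 24 kHz, the output format the Gemini Live API documentation describes; verify it for the model you use. Under that assumption, wrapping the collected response bytes in a WAV header is enough to make them playable. The sketch uses only the standard library, and the helper name is hypothetical.

```python
import io
import wave


def pcm_to_wav(pcm: bytes, rate: int = 24000, channels: int = 1, sampwidth: int = 2) -> bytes:
    """Wrap raw little-endian PCM bytes in a WAV container and return the file bytes."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wf:
        wf.setnchannels(channels)
        wf.setsampwidth(sampwidth)  # bytes per sample: 2 for 16-bit audio
        wf.setframerate(rate)
        wf.writeframes(pcm)         # header sizes are patched on close
    return buf.getvalue()
```

The returned bytes can be written to a temporary `.wav` file or sent directly in the HTTP response.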

This suits apps where learners listen to the correct pronunciation and then practice again.

Efficient Resource Handling

Temporary audio files are created and automatically cleaned up after processing.

Built-in logging helps with debugging and development.
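The sketch below combines both ideas and assumes a Python backend. It defines a context manager (hypothetical name `temp_audio_file`) that creates a temporary file, deletes it on exit even if processing raises, and logs each step. The logger name and format are placeholders.

```python
import logging
import os
import tempfile
from contextlib import contextmanager
from typing import Iterator

logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(name)s: %(message)s")
log = logging.getLogger("audio_chatbot")  # hypothetical logger name


@contextmanager
def temp_audio_file(suffix: str = ".wav") -> Iterator[str]:
    """Create a named temp file and delete it when the block exits, even on error."""
    fd, path = tempfile.mkstemp(suffix=suffix)
    os.close(fd)  # tools such as ffmpeg reopen the file by path
    log.info("created temp file %s", path)
    try:
        yield path
    finally:
        try:
            os.remove(path)
            log.info("removed temp file %s", path)
        except FileNotFoundError:
            log.warning("temp file %s was already removed", path)
```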

Cross-Platform & CORS Enabled

The server enables CORS so that browser-based clients served from other origins can call it. Native mobile clients are not subject to CORS and can call it either way.

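The server listens on 0.0.0.0 (all network interfaces), so other devices on the same network can reach it. This is useful during mobile app development, when the app runs on a phone and the backend runs on a development machine.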
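The following sketch shows both points using Python's built-in `http.server` in place of whatever web framework the project actually uses; the source does not name one. It answers CORS preflight requests, adds CORS headers to every response, and binds to `0.0.0.0`. The port `5000` and the echo behaviour of `do_POST` are placeholders.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

CORS_HEADERS = {
    "Access-Control-Allow-Origin": "*",  # development setting; list known origins in production
    "Access-Control-Allow-Methods": "POST, OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type",
}


class CorsHandler(BaseHTTPRequestHandler):
    def _send_cors(self) -> None:
        for name, value in CORS_HEADERS.items():
            self.send_header(name, value)

    def do_OPTIONS(self) -> None:
        # Answer a CORS preflight request with headers only, no body.
        self.send_response(204)
        self._send_cors()
        self.end_headers()

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        upload = self.rfile.read(length)
        reply = upload  # placeholder: the real server would return Gemini's WAV reply
        self.send_response(200)
        self._send_cors()
        self.send_header("Content-Type", "audio/wav")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        self.wfile.write(reply)


def make_server(port: int = 5000) -> ThreadingHTTPServer:
    # "0.0.0.0" listens on every network interface, so a phone on the same
    # network can reach the development machine by its LAN IP address.
    return ThreadingHTTPServer(("0.0.0.0", port), CorsHandler)


if __name__ == "__main__":
    make_server().serve_forever()
```

Allowing every origin with `*` is convenient while developing. A deployed service would normally list its known front-end origins instead.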

This backend can be plugged into a Flutter, React Native, or web-based frontend. It enables interactive voice practice and real-time feedback for language learners who want to improve their speaking skills through guided conversations.