Eyeconic (AR Glasses)

Project Details

Eyeconic is an advanced AR glasses solution powered by a Raspberry Pi 4 Model B (8GB RAM), offering both offline and online intelligent assistance modes to support real-time translation and smart interactions.

Key Features

Offline Mode

Provides translation capabilities through:

Speech recognition, using lightweight models

OCR-based image translation, enabling users to translate text from captured images

Operates completely without internet connectivity for maximum portability and privacy.
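Both offline inputs described above end at the same step: raw audio or image bytes become text, and that text is translated on the device. A minimal sketch of that flow follows. It assumes the recognizer, the OCR engine, and the translator are passed in as plain callables; the names `translate_offline`, `TextExtractor`, and `Translator` are hypothetical, and the source does not name the actual models.

```python
from typing import Callable

# Hypothetical stage signatures; the real on-device models are not named in the source.
TextExtractor = Callable[[bytes], str]  # speech recognition or OCR
Translator = Callable[[str], str]       # on-device text translation


def translate_offline(payload: bytes, extract_text: TextExtractor, translate: Translator) -> str:
    """Turn raw audio or image bytes into translated text with no network call."""
    text = extract_text(payload).strip()
    if not text:
        return ""  # nothing was recognized, so there is nothing to translate
    return translate(text)


# Stub stages standing in for real models, for illustration only.
result = translate_offline(b"raw-bytes", lambda _: " hola ", lambda t: {"hola": "hello"}[t])
```

Keeping the stages as injected callables means the same function can serve both the speech path and the OCR path, and each stage can be replaced without touching the flow.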

Online Mode

Enables two types of intelligent interactions:

Live Agent Communication: Users can speak directly to a remote assistant for real-time support.

LLM-Powered Chat: Users can interact with a custom LLM via text or voice prompts.

The backend is a Django server that exposes REST APIs and interfaces with OpenRouter and Whisper Large v3 for transcription and natural language processing.

Users can:

Record voice prompts or capture images

Send requests to the server and receive intelligent responses

Benefit from fast, reliable AI interaction, even in dynamic environments
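The request and response cycle in the list above could look roughly like this on the device side. This is a sketch using only the Python standard library. The `/api/chat/` path, the `prompt` field, and the `Token` authorization scheme are assumptions made for illustration; the source does not document the server's actual API.

```python
import json
import urllib.request


def build_chat_request(base_url: str, token: str, prompt: str) -> urllib.request.Request:
    """Build a POST request that carries a text prompt as JSON."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/chat/",  # hypothetical endpoint
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Token {token}",  # assumed auth scheme
        },
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 10.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Building the request separately from sending it makes the payload easy to inspect without a running server. A voice prompt or captured image would need a file upload (for example a multipart body) instead of the JSON body shown here.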

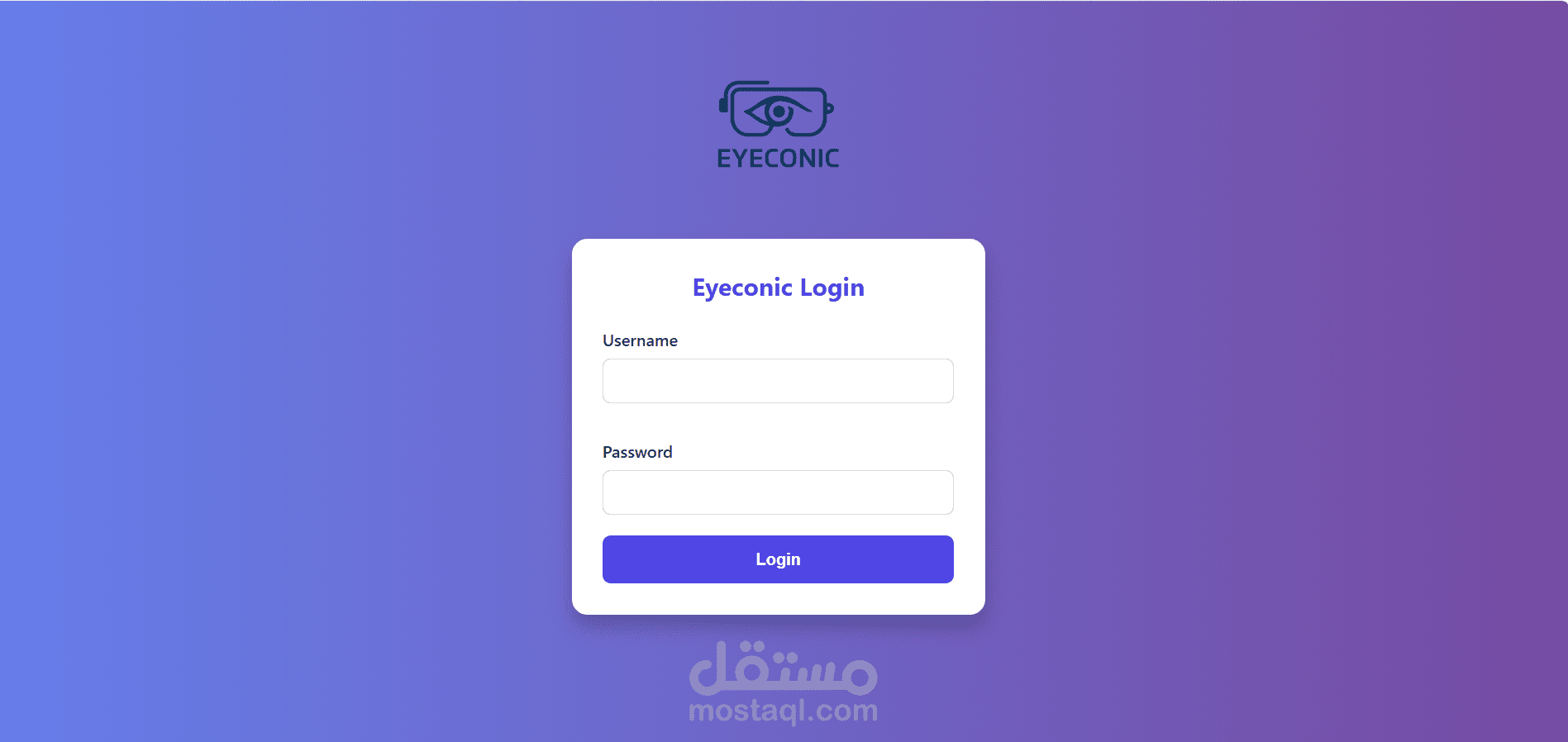

Infrastructure & Access Management

One of the major challenges was managing user login and Wi-Fi configuration without traditional input devices (no keyboard or mouse). To solve this:

We built a Flask-based local web portal hosted directly on the Raspberry Pi.

When the device boots, it activates an Access Point (AP) mode.

Users connect to the Pi’s Wi-Fi and access a local webpage to enter:

Wi-Fi credentials

Username and password

This information is securely saved in a JSON file and used by the AR glasses to initialize autonomous operation.

This ensures that Eyeconic becomes a standalone product, requiring no external setup once configured.
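The source does not say how the JSON file is protected, so the following is only one possible approach: write the portal's form values atomically and restrict the file to its owner. The function names, the file layout, and the key names are hypothetical.

```python
import json
import os
import tempfile
from pathlib import Path


def save_device_config(path, ssid, wifi_password, username, password):
    """Write the portal form values to a JSON file readable only by its owner."""
    config = {
        "wifi": {"ssid": ssid, "password": wifi_password},
        "account": {"username": username, "password": password},
    }
    path = Path(path)
    # Write to a temporary file in the same directory, then rename it into place,
    # so a power loss mid-write cannot leave a half-written config behind.
    fd, tmp_name = tempfile.mkstemp(dir=path.parent, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            json.dump(config, handle, indent=2)
        os.chmod(tmp_name, 0o600)  # owner read/write only
        os.replace(tmp_name, path)
    finally:
        if os.path.exists(tmp_name):
            os.unlink(tmp_name)  # clean up only if the rename did not happen
    return path


def load_device_config(path):
    """Read the saved configuration back at boot."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)
```

File permissions only limit which local users can read the file; the credentials are still stored in plain text in this sketch, so a production device would likely want encryption or a server-issued token in place of the raw password.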

Mobile Companion App

We also developed a Flutter-based mobile app that provides:

User registration and authentication

Chat history management

Seamless control of the device experience