Driver Monitoring System

Project details

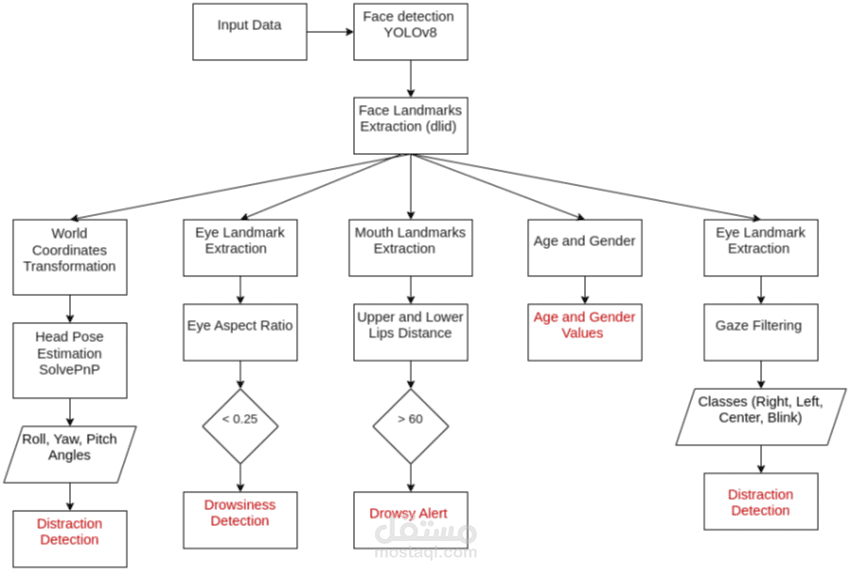

The goal of this system is to detect signs of drowsiness, distraction, or other risky behaviors that could impair driving performance. It uses computer vision techniques such as face detection, eye tracking, head pose estimation, and behavior recognition, so the driver monitoring system (DMS) helps ensure that the driver stays alert and focused on the road.

The system uses cameras or other sensors to capture the driver's face and behavior. This data is then processed by machine learning models that detect specific cues, such as facial expressions, head movements, eye states, and body posture, which indicate how alert the driver is. Based on these cues, the system can give real-time feedback, such as warnings or alerts when it detects signs of drowsiness or distraction.

The project began with YOLOv8 for face detection. It then moved to facial landmark detection for feature extraction, using the dlib library and its 68-point face landmark model to extract the facial features needed for head pose estimation and behavior monitoring. The landmark model locates key facial points on the eyes, nose, mouth, and jawline, which are crucial for tracking driver behavior.

A separate model detects distractions by identifying behaviors such as eating, drinking, or using a phone. It classifies these activities into different categories to assess whether the driver is engaged in any dangerous behavior that could impair driving.
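The text does not say how the driver's face is chosen when YOLOv8 returns several faces, for example when a passenger is also in view. A minimal sketch, assuming the detections have already been converted into hypothetical `(x1, y1, x2, y2, confidence)` tuples (for example from the `xyxy` and `conf` fields of an Ultralytics result's `boxes`), keeps the largest confident box on the assumption that the driver sits closest to the camera. The function names and the 0.5 threshold are illustrative, not taken from the project.

```python
from typing import List, Optional, Tuple

# Hypothetical detection format: (x1, y1, x2, y2, confidence) in pixels.
Detection = Tuple[float, float, float, float, float]


def box_area(d: Detection) -> float:
    """Width * height of an (x1, y1, x2, y2, conf) box."""
    return max(0.0, d[2] - d[0]) * max(0.0, d[3] - d[1])


def select_driver_face(detections: List[Detection], min_conf: float = 0.5) -> Optional[Detection]:
    """Return the largest face box with confidence >= min_conf, or None.

    Assumes the driver is the person closest to the camera, so their face
    gives the largest box; low-confidence boxes are discarded first.
    """
    confident = [d for d in detections if d[4] >= min_conf]
    if not confident:
        return None  # no usable face in this frame
    return max(confident, key=box_area)


if __name__ == "__main__":
    faces = [
        (100, 80, 220, 230, 0.91),  # large, confident box: likely the driver
        (400, 120, 450, 180, 0.88),  # small box: e.g. a passenger further away
        (50, 50, 300, 300, 0.30),  # largest box, but below min_conf
    ]
    print(select_driver_face(faces))  # → (100, 80, 220, 230, 0.91)
```

The selected box is what would then be passed on to the landmark stage; picking by area is only one possible rule, and a fixed camera could instead use the box position.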
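For the eye-state cue, one widely used landmark-based measure is the eye aspect ratio (EAR): the two vertical eye distances divided by twice the horizontal one, which drops toward zero as the eye closes. The text does not confirm that the project uses EAR, so treat this as an assumed technique. The sketch assumes the 68 landmarks are available as `(x, y)` tuples in the standard 0-based order used by dlib's 68-point model, where indices 36-41 and 42-47 outline the two eyes; the helper names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# 0-based indices of the two eyes in the standard 68-point layout
# (six points per eye, ordered around the eye contour).
EYE_INDICES = (range(36, 42), range(42, 48))


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|) for eye points p1..p6.

    p1 and p4 are the eye corners; the other pairs span the eye vertically,
    so the ratio falls toward zero as the eyelids close.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def mean_ear(landmarks: Sequence[Point]) -> float:
    """Average EAR over both eyes, given all 68 (x, y) landmarks of one face."""
    ears = [eye_aspect_ratio([landmarks[i] for i in idx]) for idx in EYE_INDICES]
    return sum(ears) / len(ears)


if __name__ == "__main__":
    open_eye = [(0, 0), (1, -1), (2, -1), (3, 0), (2, 1), (1, 1)]
    closed_eye = [(0, 0), (1, -0.1), (2, -0.1), (3, 0), (2, 0.1), (1, 0.1)]
    print(round(eye_aspect_ratio(open_eye), 3))  # → 0.667
    print(round(eye_aspect_ratio(closed_eye), 3))  # → 0.067
```

Because EAR is a ratio of distances, it does not depend on how large the face appears in the frame, which is why it pairs well with a landmark model.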
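Head pose estimation from landmarks is usually done by fitting a 3D face model to the 2D points (for example with OpenCV's `solvePnP`). As a much simpler stand-in, and explicitly a swapped-in heuristic rather than the project's method, the horizontal position of the nose tip (index 30) between the two jaw ends (indices 0 and 16) gives a rough yaw cue: about 0.5 for a frontal face, moving toward 0 or 1 as the head turns. The function names and the 0.15 tolerance are assumptions.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]

# 0-based indices in the standard 68-point layout.
JAW_END_A = 0  # one end of the jawline (near one ear)
JAW_END_B = 16  # the other end of the jawline
NOSE_TIP = 30


def yaw_ratio(landmarks: Sequence[Point]) -> float:
    """Where the nose tip sits horizontally between the two jaw ends (0..1).

    Roughly 0.5 for a frontal face; it moves toward 0 or 1 as the head
    turns sideways. A coarse 2D proxy, not a calibrated angle in degrees.
    """
    xa = landmarks[JAW_END_A][0]
    xb = landmarks[JAW_END_B][0]
    return (landmarks[NOSE_TIP][0] - xa) / (xb - xa)


def looking_away(landmarks: Sequence[Point], tolerance: float = 0.15) -> bool:
    """True when the yaw ratio is more than `tolerance` away from 0.5."""
    return abs(yaw_ratio(landmarks) - 0.5) > tolerance
```

This only captures left/right turning; pitch (looking down at a phone, for instance) and a real angle in degrees would need the 3D model fit mentioned above.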
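The distraction model is described only as classifying activities such as eating, drinking, or using a phone. Assuming it outputs one raw score (logit) per class, turning those scores into a per-frame decision could look like the sketch below. The label list, including the extra `normal_driving` class, and the 0.6 probability threshold are hypothetical.

```python
import math
from typing import List, Tuple

# Hypothetical label set: the three behaviors named in the text plus a normal class.
CLASSES = ["normal_driving", "eating", "drinking", "using_phone"]
DANGEROUS = {"eating", "drinking", "using_phone"}


def softmax(logits: List[float]) -> List[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def assess_behavior(logits: List[float], min_prob: float = 0.6) -> Tuple[str, float, bool]:
    """Return (label, probability, is_dangerous) for one frame.

    A dangerous label is only flagged when the model is at least `min_prob`
    sure of it, so ambiguous frames do not trigger warnings.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    label, p = CLASSES[best], probs[best]
    return label, p, label in DANGEROUS and p >= min_prob


if __name__ == "__main__":
    label, p, dangerous = assess_behavior([0.1, 0.2, 0.1, 3.0])
    print(label, dangerous)  # → using_phone True
```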
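For the real-time feedback described above, a single per-frame flag (eyes look closed, head turned away, dangerous activity detected) is usually too noisy to alert on directly, since a normal blink also closes the eyes for a frame or two. A minimal sketch, assuming frames are processed one at a time, raises an alert only after the condition has held for a run of consecutive frames. The class name, the 0.25 threshold, and the frame counts are illustrative assumptions, not values from the project.

```python
from dataclasses import dataclass, field


@dataclass
class ConsecutiveFrameAlarm:
    """Signal an alert only once a per-frame condition has held for
    `min_frames` consecutive frames; any frame without it resets the count.

    This keeps single-frame events, such as a normal blink or one
    misclassified frame, from triggering a warning.
    """

    min_frames: int = 15
    _count: int = field(default=0, init=False)

    def update(self, condition: bool) -> bool:
        """Feed one frame's condition; return True while the alert is active."""
        self._count = self._count + 1 if condition else 0
        return self._count >= self.min_frames


if __name__ == "__main__":
    THRESHOLD = 0.25  # illustrative eye-openness threshold; needs tuning per camera
    alarm = ConsecutiveFrameAlarm(min_frames=3)
    scores = [0.30, 0.20, 0.31, 0.18, 0.17, 0.16, 0.15]  # made-up per-frame eye-openness scores
    print([alarm.update(s < THRESHOLD) for s in scores])
    # → [False, False, False, False, False, True, True]
```

Because the count is in frames, `min_frames` has to match the camera's frame rate: at 30 fps, for instance, 15 frames correspond to half a second.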