Hadith Q&A with RAG (NLP)

Project Details

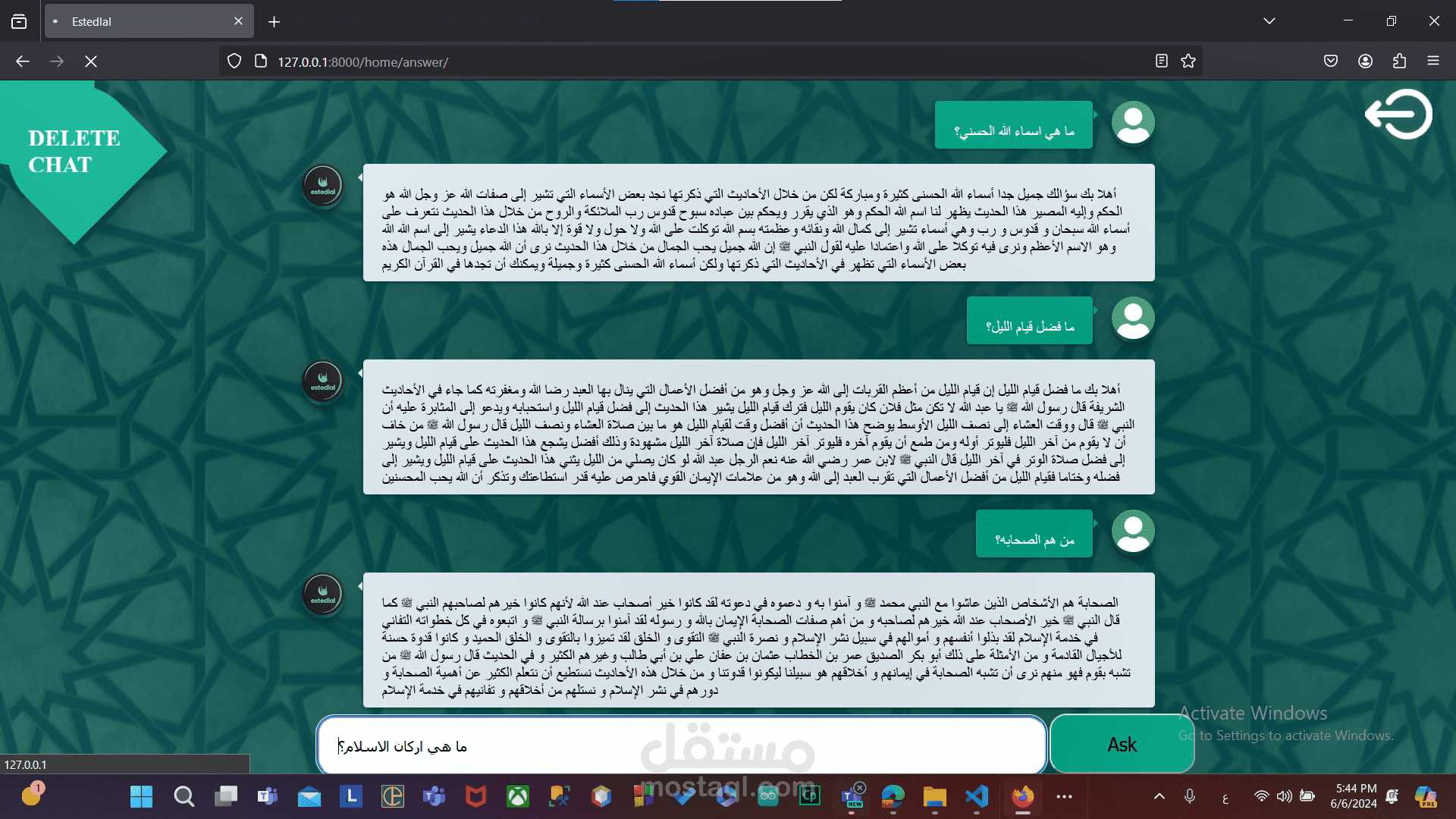

The project focuses on improving the responses generated by the Gemini API in religious discussions and question-answering scenarios. This was achieved through prompt engineering built on Retrieval-Augmented Generation (RAG).

Details:

Objective:

Enhance the accuracy and relevance of AI-generated responses in the domain of religious conversations.

Provide users with reliable and contextually appropriate answers.

Key Components:

RAG Model Integration:

RAG was used to retrieve relevant context, which is passed to the Gemini API along with the user's question to improve response generation.
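The retrieve-then-generate flow described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual pipeline: the corpus is a set of placeholder passages, retrieval uses a simple bag-of-words cosine similarity rather than whatever retriever the project used, and `generate` is a hypothetical callable standing in for the call to the Gemini API.

```python
import math
import re
from collections import Counter

# Placeholder corpus (assumption): a real system would index actual
# Hadith texts together with their sources.
PASSAGES = [
    "Passage A: guidance on honesty in trade and fair dealing.",
    "Passage B: guidance on kindness to parents and relatives.",
    "Passage C: guidance on cleanliness before prayer.",
]


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, passages, k=2):
    """Return the k passages most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        passages,
        key=lambda p: cosine(q, Counter(tokenize(p))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, context):
    """Combine the retrieved context and the question into one prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"
    )


def answer(question, generate, passages=PASSAGES, k=2):
    """Retrieve context, build the prompt, and pass it to `generate`.

    `generate` is a hypothetical stand-in for the language model call.
    """
    context = retrieve(question, passages, k)
    return generate(build_prompt(question, context))
```

Keeping `generate` as a parameter means the retrieval and prompt-building steps can be checked without any network access, and the model call can be swapped in later.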

Classification Model:

A separate machine learning model was developed to classify religious texts, for example grading Hadiths by authenticity (strong or weak).
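As a rough illustration of what such a text classifier involves, the sketch below implements a small multinomial Naive Bayes model with Laplace smoothing. The source does not say which algorithm or features the project used, so the choice of Naive Bayes, the class name `NaiveBayesTextClassifier`, and the toy training descriptions are all assumptions made for illustration only; they are not real Hadith data or gradings.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesTextClassifier:
    """Multinomial Naive Bayes over whitespace tokens (illustrative only)."""

    def fit(self, texts, labels):
        # Count documents per class and word occurrences per class.
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(n_docs / total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in text.lower().split():
                if word not in self.vocab:
                    continue  # ignore words never seen in training
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Toy placeholder descriptions (assumption), not real gradings.
train_texts = [
    "connected chain reliable narrators",
    "connected chain precise narrators",
    "broken chain unknown narrator",
    "broken chain unreliable narrator",
]
train_labels = ["strong", "strong", "weak", "weak"]

classifier = NaiveBayesTextClassifier().fit(train_texts, train_labels)
```

A call such as `classifier.predict("broken chain unknown narrator")` returns one of the training labels. Any real grading would need a properly sourced, expert-labeled dataset.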

Frontend Development:

Designed a user-friendly interface for the application.

The frontend was implemented using standard tools to ensure a seamless user experience.

Backend Development:

Built using Django, the backend handles the application's logic and integrates the AI models.

Deployment:

The application was deployed using the Gradio library to provide an interactive and accessible platform for users.
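A Gradio deployment of a question-answering function can be as small as the sketch below. It assumes the `gradio` package is installed; `answer_question` is a hypothetical placeholder for the project's actual retrieval and generation pipeline, and the interface labels are illustrative.

```python
def answer_question(question: str) -> str:
    """Hypothetical stand-in for the project's Q&A pipeline."""
    question = question.strip()
    if not question:
        return "Please enter a question."
    # A real application would run retrieval and call the language model here.
    return f"(placeholder answer for: {question})"


def build_demo():
    """Wrap answer_question in a simple Gradio text-in, text-out interface."""
    import gradio as gr  # third-party dependency: pip install gradio

    return gr.Interface(
        fn=answer_question,
        inputs=gr.Textbox(label="Question"),
        outputs=gr.Textbox(label="Answer"),
        title="Hadith Q&A",
    )


# To serve the interface locally, run:
#     build_demo().launch()
```

Importing `gradio` inside `build_demo` keeps `answer_question` usable and testable on its own, without starting a web server.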

Technologies Used:

Frontend: Custom web interface for user interaction.

Backend: Django framework for robust backend architecture.

AI Models: RAG for prompt engineering and a classification model for text categorization.

Gradio: For deployment and interactive user testing.

Outcomes:

Improved response accuracy for religious Q&A.

Successfully deployed an application that combines RAG-based response generation with a Hadith classification model.

Enabled reliable classification of religious texts to assist users in better understanding Hadiths.