Multimodal-Translation-Project

Project Details

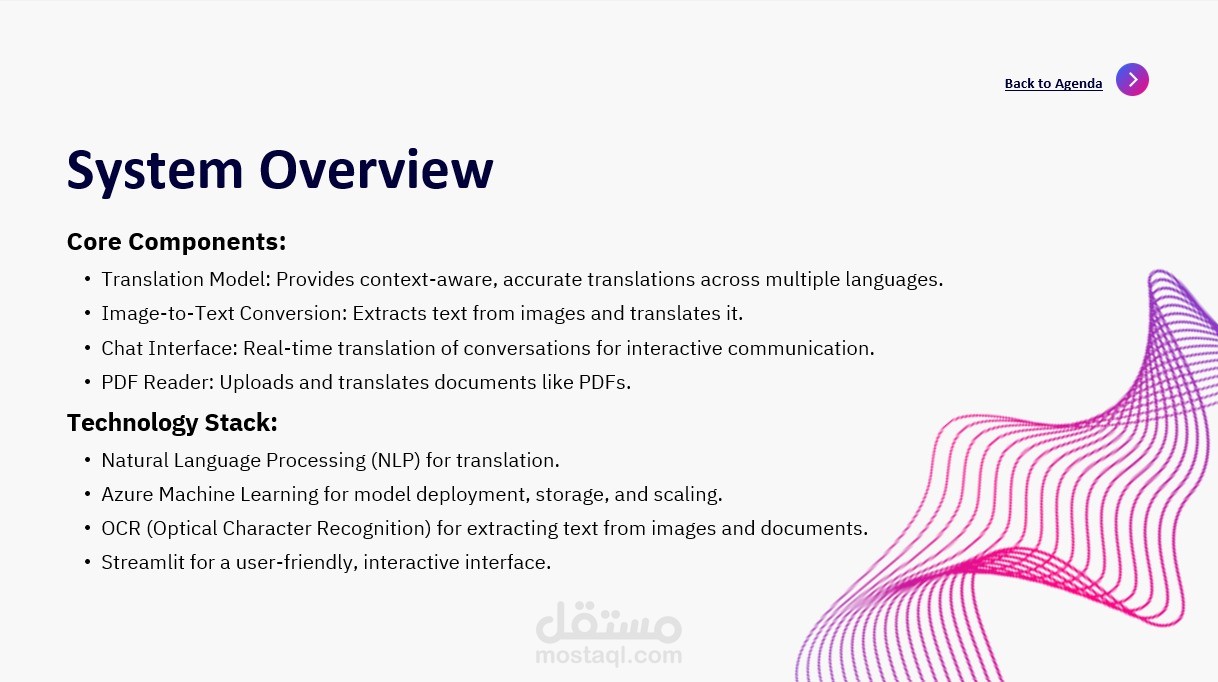

The Multimodal Translation Project aims to improve translation accuracy and contextual understanding by combining text with other kinds of input, such as images and other sensory data. This matters in real-world settings where text alone leaves the intended meaning ambiguous, and a second data type can make the translation more nuanced and accurate. The project integrates machine learning models that accept several input formats, which makes it applicable to fields such as language learning, accessible content creation, and cross-cultural communication.

The project combines natural language processing (NLP) and computer vision techniques to address the problem of context in translation, particularly cases where the intended meaning becomes clear only through visual or situational cues. The repository also includes documentation for developers and researchers who want to extend multimodal translation systems. The project was developed as part of a DEPI course and reflects Omar's focus on practical machine learning applications in multilingual and multimodal settings.
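As a toy illustration of how a visual cue can resolve an ambiguity that text alone cannot, the sketch below picks a translation for an ambiguous English word by comparing image tags against cue words for each sense. This is not the project's implementation: the `SENSES` table, the `translate_word` helper, the use of image tags as the visual signal, and the English-to-Spanish pair are all hypothetical assumptions chosen for clarity. A real system would typically use learned text and image embeddings rather than hand-written keyword sets.

```python
# Hypothetical sense inventory (illustration only, not from the project):
# ambiguous English word -> {sense name: (Spanish translation, visual cue words)}
SENSES = {
    "bank": {
        "finance": ("banco", {"money", "atm", "building", "teller"}),
        "river": ("orilla", {"water", "river", "grass", "tree"}),
    },
}


def translate_word(word, image_tags):
    """Choose the translation whose cue words best overlap the image tags.

    `image_tags` stands in for the output of an assumed image tagger.
    Words without an entry in SENSES are returned unchanged.
    """
    senses = SENSES.get(word)
    if not senses:
        return word
    tags = set(image_tags)
    # Score each sense by how many of its cue words appear in the image tags;
    # on a tie, max() keeps the first sense listed.
    translation, _cues = max(senses.values(), key=lambda sense: len(sense[1] & tags))
    return translation


if __name__ == "__main__":
    print(translate_word("bank", ["river", "water", "boat"]))  # orilla
    print(translate_word("bank", ["atm", "money"]))            # banco
```

The same sentence, "I sat by the bank", would be translated differently depending on whether the accompanying image shows a river or a cash machine, which is the kind of context the paragraphs above describe.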