Facial Emotion Recognition

Work Details

Overview

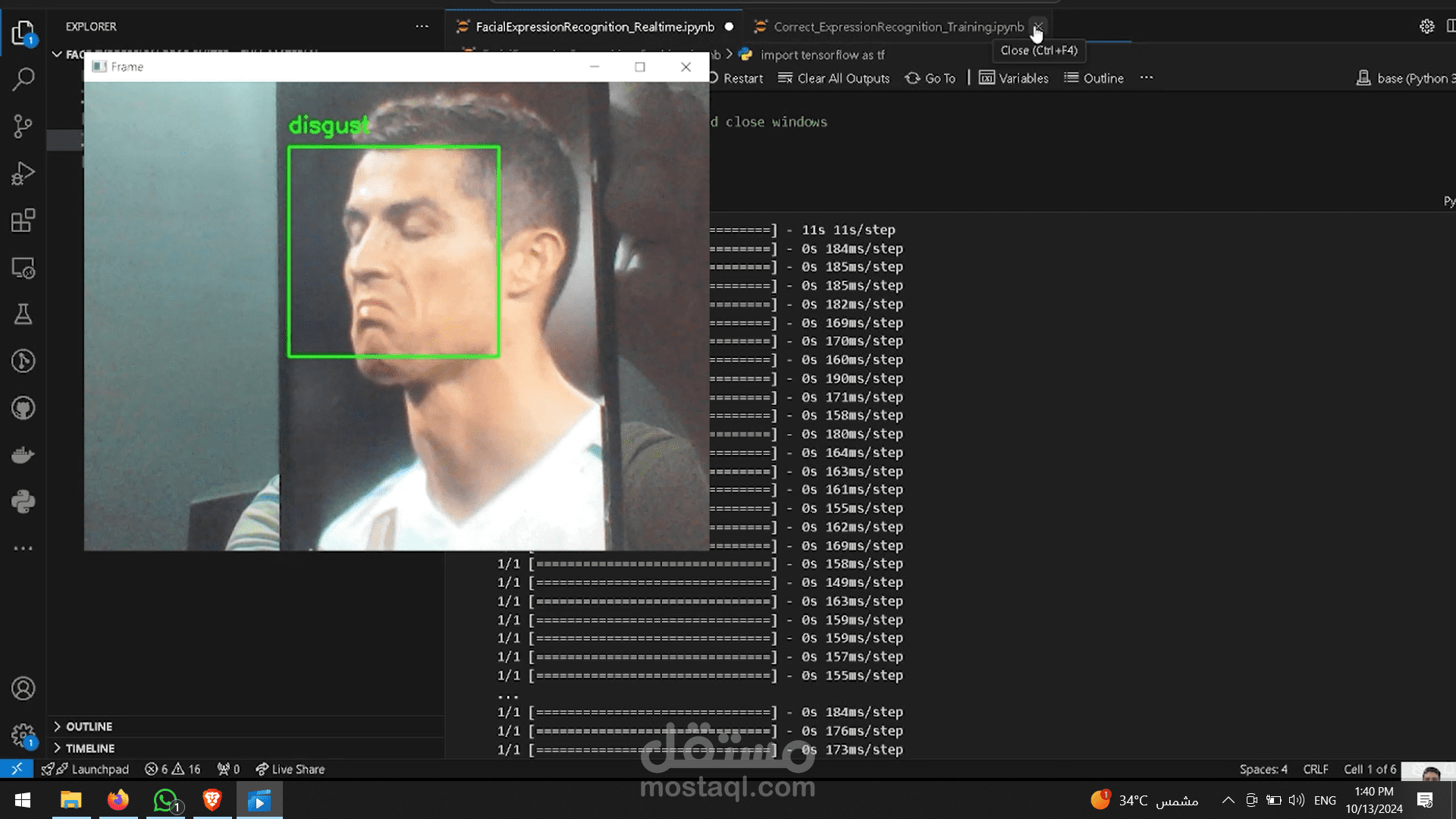

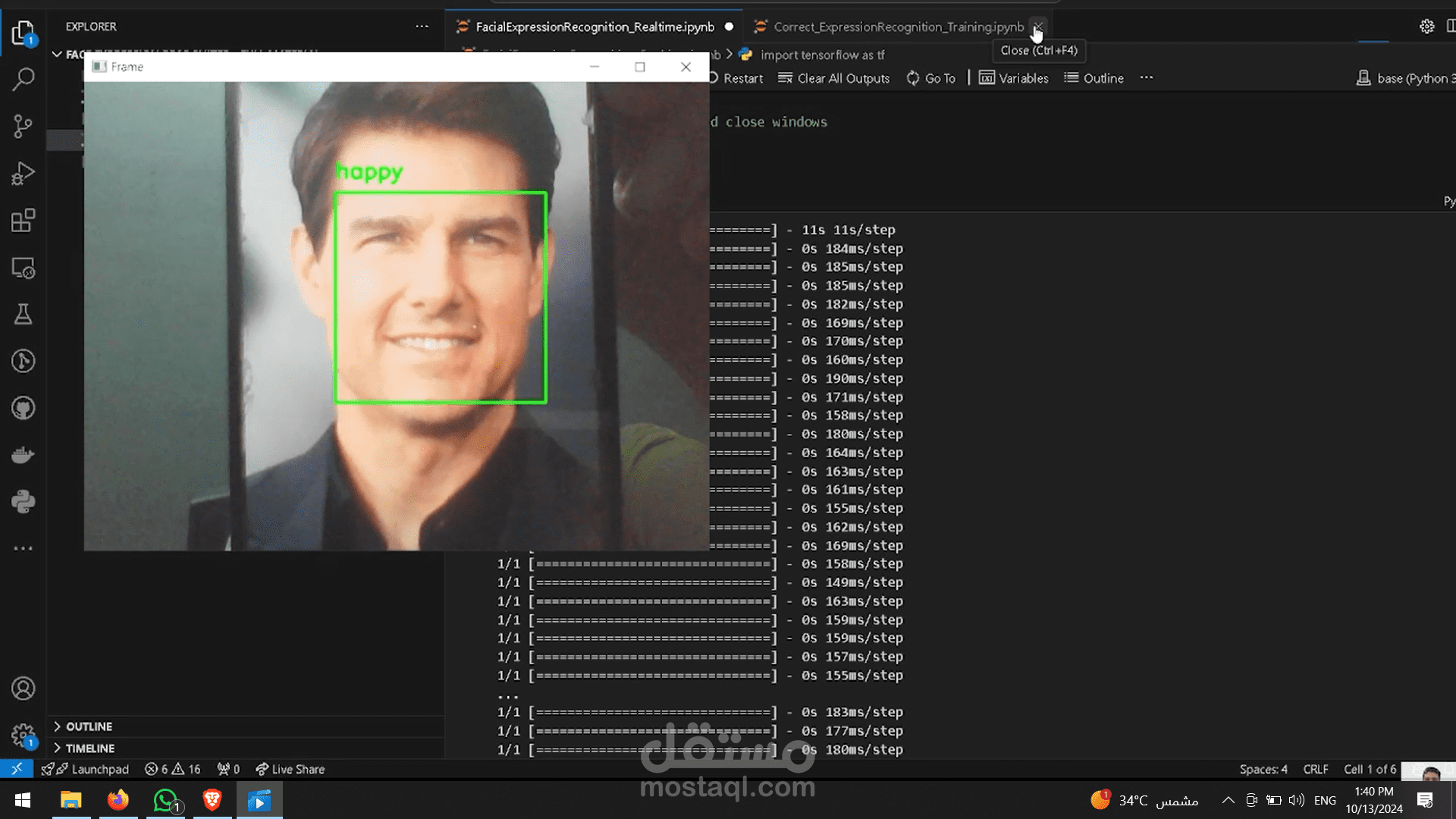

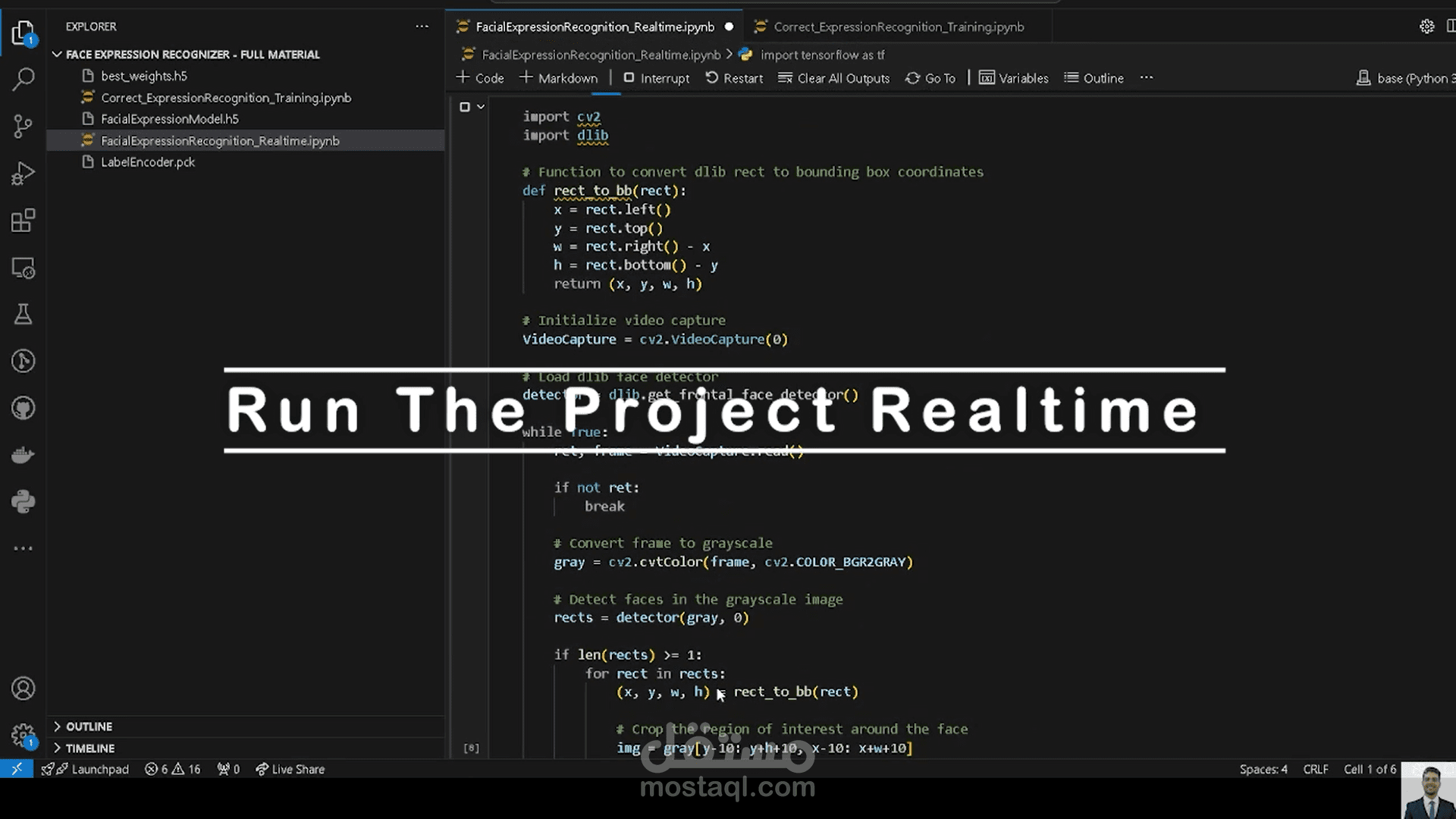

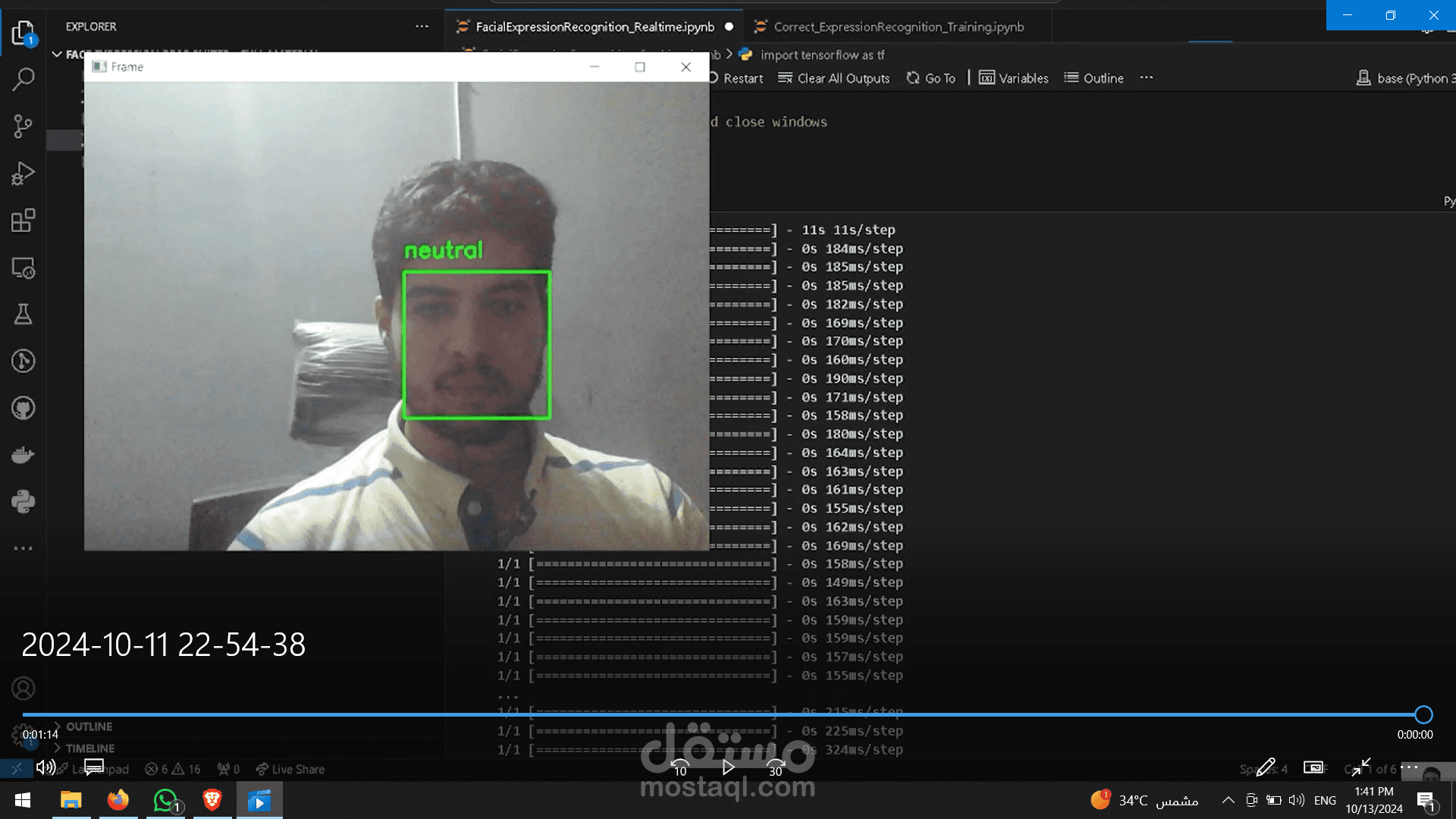

This project classifies facial expressions with a Convolutional Neural Network (CNN). The model is trained on a dataset of facial images and predicts one of seven emotion classes, such as happiness, sadness, and anger.

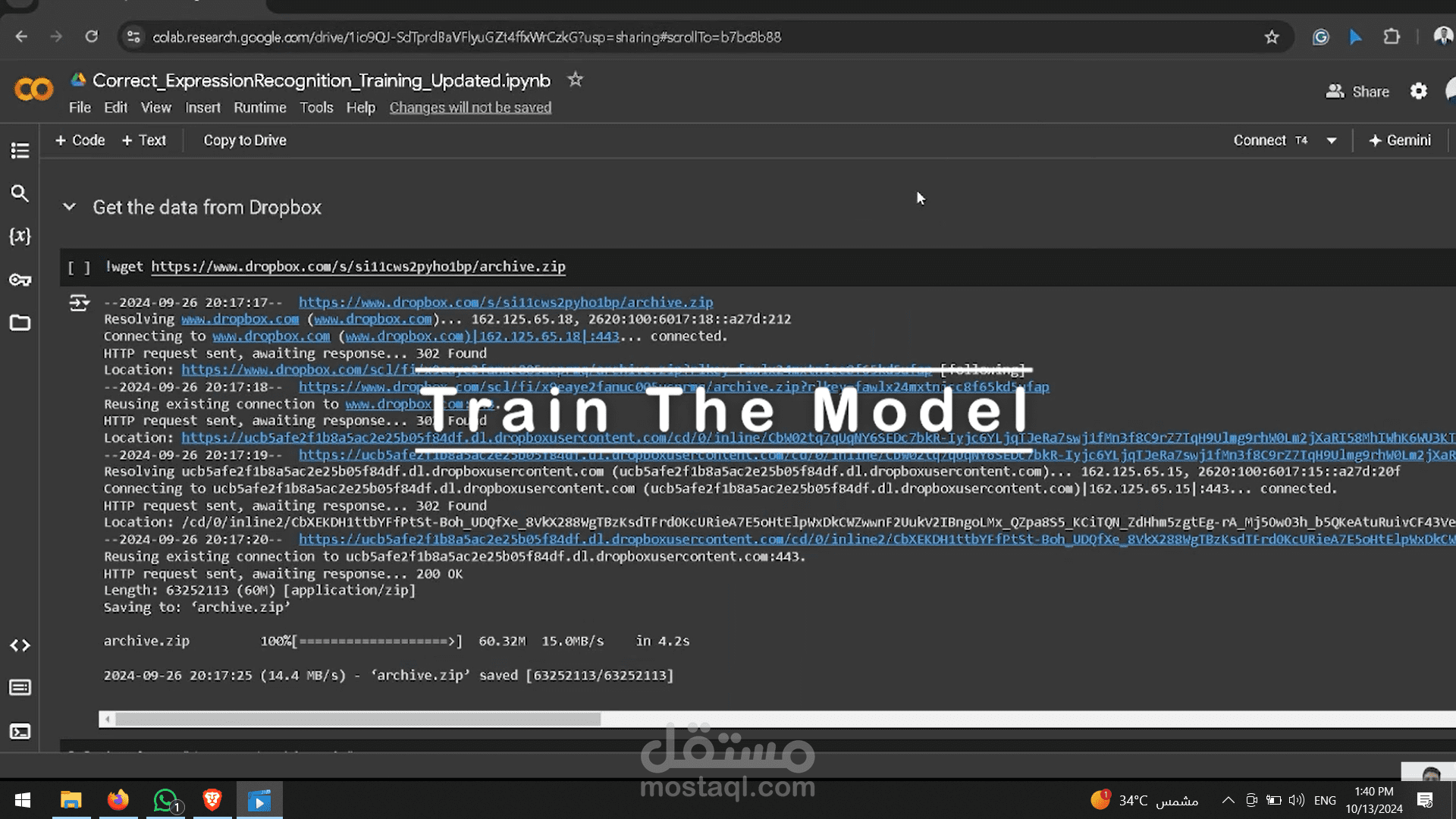

Dataset

The dataset contains images categorized into 7 different expressions. Each image is preprocessed and resized to 150x150 pixels before being fed into the model.

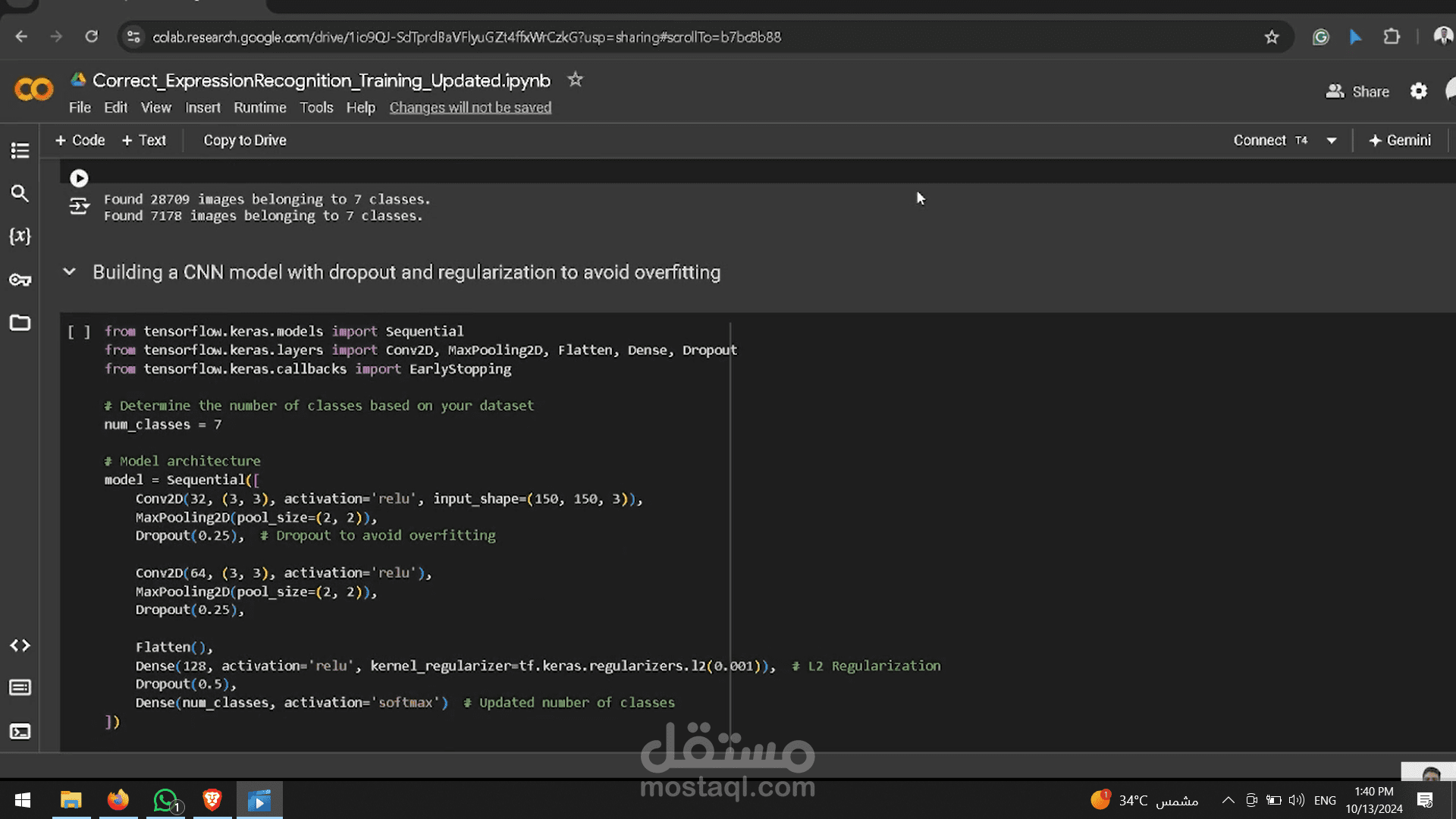

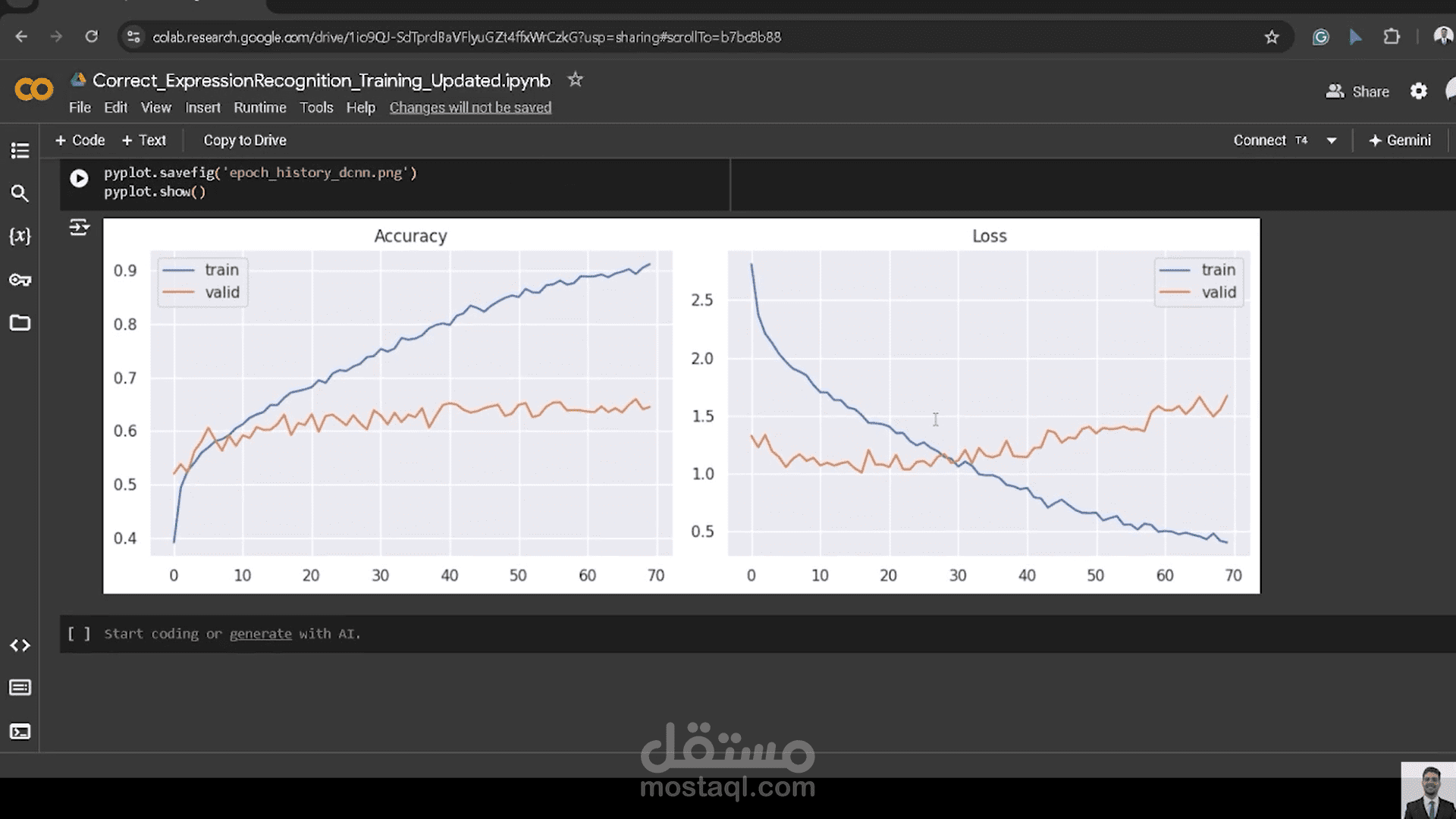

Model Architecture

The CNN model is composed of several layers:

Convolutional Layers: Extract features from the image using filters.

Pooling Layers: Reduce the dimensionality of the feature maps.

Dropout Layers: Reduce overfitting by randomly setting a fraction of input units to 0 during training.

Fully Connected Layers: Perform classification based on extracted features.
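The pooling and dropout steps described above can be illustrated with a small framework-free sketch. This is not the project's code: the function names `max_pool_2x2` and `dropout` are hypothetical, and the dropout variant shown is "inverted" dropout, which rescales the surviving values so their expected sum stays the same.

```python
import random


def max_pool_2x2(feature_map):
    """Downsample a 2D feature map by keeping the max of each 2x2 block."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            )
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


def dropout(values, rate, rng):
    """Inverted dropout: zero each value with probability `rate` and
    scale the survivors by 1 / (1 - rate)."""
    keep = 1.0 - rate
    return [v / keep if rng.random() >= rate else 0.0 for v in values]


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [3, 2, 4, 6],
]
pooled = max_pool_2x2(feature_map)
print(pooled)  # [[4, 5], [3, 7]]

rng = random.Random(0)  # fixed seed so the run is repeatable
dropped = dropout([1.0] * 8, rate=0.25, rng=rng)
print(dropped)  # each entry is either 0.0 or 1 / 0.75
```

Pooling halves each spatial dimension, which is why it reduces the size of the feature maps. Dropout is only active during training; at inference time the values pass through unchanged.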

Detailed Architecture:

Conv2D Layer: 32 filters, kernel size (3, 3), activation 'ReLU'.

MaxPooling2D Layer: Pool size (2, 2).

Dropout Layer: 25% dropout rate.

Conv2D Layer: 64 filters, kernel size (3, 3), activation 'ReLU'.

MaxPooling2D Layer: Pool size (2, 2).

Dropout Layer: 25% dropout rate.

Flatten Layer: Flattens the stacked feature maps into a 1D vector.

Dense Layer: 128 units, activation 'ReLU', L2 regularization.

Dropout Layer: 50% dropout rate.

Output Layer: Softmax activation with 7 units for 7 classes.
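To make the layer list concrete, the sketch below traces the output shape and parameter count of each layer using plain arithmetic. Several details are assumptions, since the description does not state them: a 150x150 input with 3 color channels, no padding, and a stride of 1 for the convolutions. The helper names are hypothetical. Dropout layers are omitted because they change neither the shape nor the parameter count.

```python
def conv2d(shape, filters, kernel=3):
    """Unpadded, stride-1 convolution: each side shrinks by kernel - 1."""
    h, w, channels = shape
    # kernel*kernel*channels weights plus one bias, per filter
    params = (kernel * kernel * channels + 1) * filters
    return (h - kernel + 1, w - kernel + 1, filters), params


def max_pool(shape, pool=2):
    """Non-overlapping pooling divides each spatial side by the pool size."""
    h, w, channels = shape
    return (h // pool, w // pool, channels)


def dense(inputs, units):
    """Fully connected layer: one weight per input plus one bias, per unit."""
    return units, (inputs + 1) * units


input_shape = (150, 150, 3)  # assumption: RGB input

trace = []
shape, n = conv2d(input_shape, 32)
trace.append(("Conv2D 32", shape, n))
shape = max_pool(shape)
trace.append(("MaxPooling2D", shape, 0))
shape, n = conv2d(shape, 64)
trace.append(("Conv2D 64", shape, n))
shape = max_pool(shape)
trace.append(("MaxPooling2D", shape, 0))
flat = shape[0] * shape[1] * shape[2]
trace.append(("Flatten", (flat,), 0))
units, n = dense(flat, 128)
trace.append(("Dense 128", (units,), n))
units, n = dense(units, 7)
trace.append(("Output 7", (units,), n))

total_params = sum(n for _, _, n in trace)
for name, out_shape, n in trace:
    print(f"{name:<14} {str(out_shape):<16} {n:>12,}")
print(f"total parameters: {total_params:,}")  # 10,637,255
```

Under these assumptions, almost all of the parameters sit in the 128-unit dense layer, because it is connected to 82,944 flattened features. With grayscale input, only the first convolution's count would change.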

How CNNs Work

Convolutional Neural Networks (CNNs) are deep learning models designed to process grid-structured data, such as images. Their convolutional layers slide a set of filters over the input image, extracting features like edges, textures, and patterns.
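As a small illustration of how a filter extracts an edge, the sketch below slides a hand-written 3x3 vertical-edge filter over a tiny 5x5 image and applies ReLU. The image, the filter values, and the function names are made up for the example. In a trained CNN the filter weights are learned rather than written by hand.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1), summing the
    element-wise products at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Replace negative responses with 0."""
    return [[max(0, v) for v in row] for row in feature_map]


# 5x5 image: dark columns (0) on the left, bright columns (1) on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Responds where brightness increases from left to right
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = relu(conv2d_valid(image, vertical_edge))
print(feature_map)  # [[3, 3, 0], [3, 3, 0], [3, 3, 0]]
```

The response is high where the window straddles the dark-to-bright boundary and zero where the window covers a uniform region. As in deep learning libraries, the filter is applied without being flipped, so this is technically a cross-correlation.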

Image Processing:

Rescaling: Pixel values are rescaled to the range 0 to 1.

Data Augmentation: Techniques such as rotation, zoom, and flipping are applied to increase data diversity and reduce overfitting.

Normalization: Input images are standardized to ensure consistent input distribution.
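The three steps above can be sketched on a tiny 2x2 grayscale image without any framework. Several details here are assumptions, not taken from the project: 8-bit pixel values in the range 0 to 255, a horizontal flip as the only augmentation shown (rotation and zoom need interpolation and are left out), and per-image standardization to zero mean and unit variance. The function names are hypothetical.

```python
def rescale(image):
    """Map 8-bit pixel values from [0, 255] to [0.0, 1.0]."""
    return [[p / 255.0 for p in row] for row in image]


def horizontal_flip(image):
    """Mirror the image left to right (one simple augmentation)."""
    return [row[::-1] for row in image]


def standardize(image):
    """Shift to zero mean and scale to unit variance, per image."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    variance = sum((p - mean) ** 2 for p in pixels) / len(pixels)
    std = variance ** 0.5 or 1.0  # fall back to 1.0 for a flat image
    return [[(p - mean) / std for p in row] for row in image]


image = [
    [0, 51],
    [102, 255],
]

scaled = rescale(image)
flipped = horizontal_flip(scaled)
standardized = standardize(scaled)

print(scaled)
print(flipped)
print(standardized)
```

In practice, augmentation is applied randomly and only to training images, while rescaling and standardization are applied identically at training and inference time.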

Work Card

| Field | Value |
| --- | --- |
| Freelancer | مصطفى ر. |
| Likes | 0 |
| Views | 10 |
| Date added | |