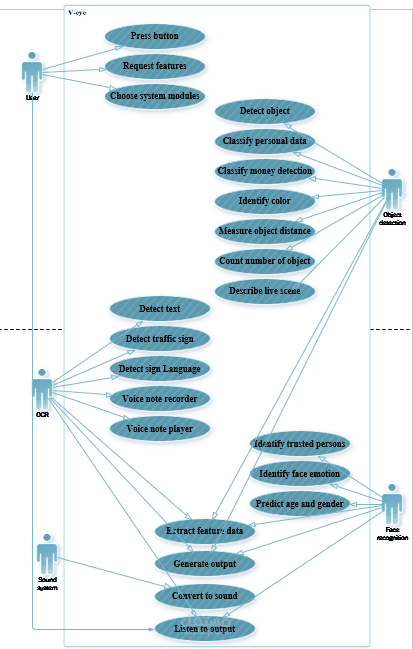

V-Eye System Analysis

Work Details

I used Visio to create several UML models that illustrate the overall concept of the V-Eye project, including use case and activity diagrams.

I then modeled each feature separately, once as a use case diagram and once as an activity diagram.

In the use case diagram, I described the system's operation, starting from when the button is pressed and ending with the audio output generated for visually impaired users.
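The flow in the use case diagram can be sketched as a small pipeline. This is only an illustrative sketch, not the project's actual code: `run_feature`, `capture`, `analyze`, and `speak` are hypothetical names, and the camera, model, and speech engine are replaced by stand-in functions so that the example runs without any hardware.

```python
from typing import Callable, List


def run_feature(capture: Callable[[], bytes],
                analyze: Callable[[bytes], str],
                speak: Callable[[str], None]) -> str:
    """Handle one button press: capture a single image, analyze it, speak the result."""
    image = capture()          # single shot from the camera
    message = analyze(image)   # feature-specific step (object detection or OCR)
    speak(message)             # audio output for the user
    return message


# Stand-ins (assumptions) so the sketch runs without a camera or speech engine.
spoken: List[str] = []


def fake_capture() -> bytes:
    return b"raw-image-bytes"


def fake_analyze(image: bytes) -> str:
    return f"image of {len(image)} bytes received"


result = run_feature(fake_capture, fake_analyze, spoken.append)
print(result)
```

Passing the three steps in as functions keeps the button-to-audio flow identical for every feature; only the `analyze` step changes.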

The first feature is object detection. It begins by loading a pre-trained model that covers 80 object classes. The camera then takes a single shot, the model is applied to the image to identify the type of object, and the result is delivered to the blind user as audio output.
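The last step of object detection, turning the model's output into a sentence that can be spoken, might look like the sketch below. Everything here is an assumption made for illustration: the `describe_detections` helper, the `(class_id, confidence)` output format, the 0.5 confidence threshold, and the four-entry `LABELS` list that stands in for the real 80-class list.

```python
from typing import List, Sequence, Tuple

# Hypothetical subset of class names; the real model has 80 classes.
LABELS = ["person", "chair", "cup", "bottle"]


def describe_detections(detections: Sequence[Tuple[int, float]],
                        labels: Sequence[str],
                        min_confidence: float = 0.5) -> str:
    """Convert (class_id, confidence) pairs into a sentence for the speech engine."""
    # Keep only confident detections and map class ids to names.
    names: List[str] = [labels[class_id]
                        for class_id, confidence in detections
                        if confidence >= min_confidence]
    if not names:
        return "No objects detected."
    # Remove duplicates while keeping the order in which objects were reported.
    unique_names = list(dict.fromkeys(names))
    return "Detected: " + ", ".join(unique_names) + "."


# Assumed model output for one captured image.
sample = [(0, 0.91), (2, 0.75), (2, 0.66), (1, 0.30)]
print(describe_detections(sample, LABELS))
```

The low-confidence `chair` detection is dropped and the two `cup` detections are merged, so the user hears each object type once.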

The second feature is OCR (optical character recognition). It captures a single image, scans all of the text in it, identifies each letter individually, and then outputs the recognized text as audio for the blind user.
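Because the letters are identified one by one, they have to be put back into reading order before they can be spoken. The sketch below shows one simple way to do that. It is an assumption, not the project's method: `assemble_text` is a hypothetical helper, each recognized letter is assumed to arrive as a `(character, x, y, width)` tuple in pixels, and the `line_height` and `space_gap` values are arbitrary.

```python
from typing import Dict, List, Sequence, Tuple

Char = Tuple[str, int, int, int]  # (character, x, y, width), all in pixels


def assemble_text(chars: Sequence[Char],
                  line_height: int = 20,
                  space_gap: int = 15) -> str:
    """Rebuild text from individually recognized letters."""
    # Group letters into lines by vertical position. This is a simplification:
    # a line that straddles a bucket boundary would be split in two.
    lines: Dict[int, List[Tuple[int, int, str]]] = {}
    for ch, x, y, width in chars:
        lines.setdefault(y // line_height, []).append((x, width, ch))

    output: List[str] = []
    for key in sorted(lines):                 # top to bottom
        text = ""
        previous_end = None
        for x, width, ch in sorted(lines[key]):   # left to right
            # A wide horizontal gap is treated as a space between words.
            if previous_end is not None and x - previous_end > space_gap:
                text += " "
            text += ch
            previous_end = x + width
        output.append(text)
    return "\n".join(output)


# Assumed recognizer output, deliberately out of order.
sample_chars = [
    ("X", 62, 5, 10), ("N", 0, 4, 10), ("2", 12, 27, 10), ("T", 86, 4, 10),
    ("O", 12, 3, 10), ("B", 0, 25, 10), ("E", 50, 4, 10), ("I", 74, 3, 10),
]
print(assemble_text(sample_chars))
```

The assembled string can then be handed to the speech engine in a single call instead of being spoken letter by letter.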