Data Pipeline with Airflow

تفاصيل العمل

Project Overview

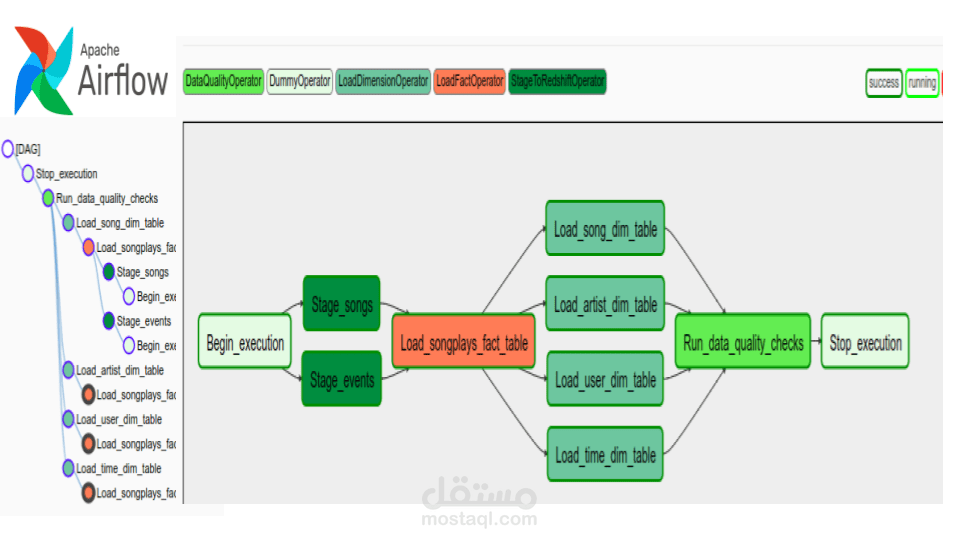

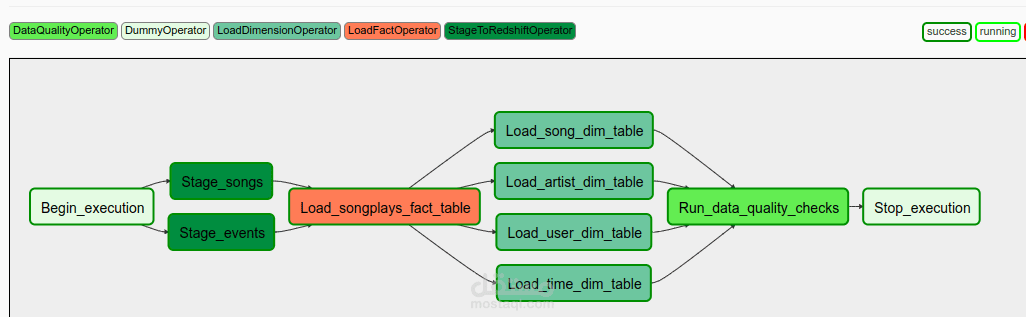

This project focuses on automating and monitoring the ETL pipeline for a music streaming data warehouse on Amazon Redshift.

Implementation Stages

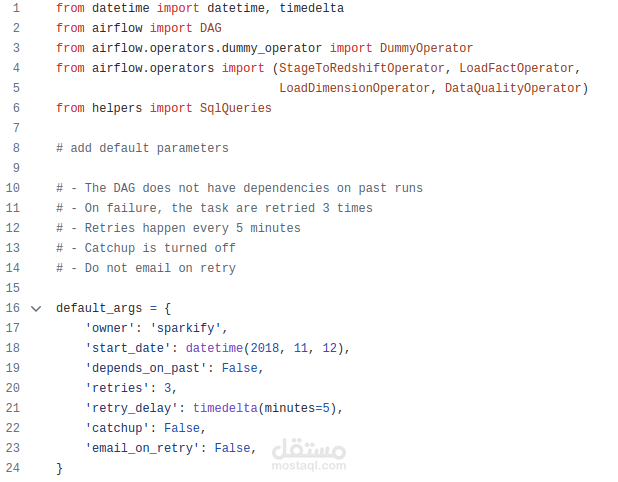

1. Airflow DAG Development:

Directed Acyclic Graphs (DAGs) were created in Python to define the ETL workflow within Airflow.

create_tables_dag.py: This DAG utilizes Python scripts to create the necessary tables in the Redshift data warehouse based on a predefined schema.

process_data_dag.py: This DAG orchestrates the entire ETL process, including:

Staging data: Extracting JSON files from S3 buckets into temporary staging tables within Redshift.

Data transformation: Performing any necessary transformations on the staged data to ensure compatibility with the target tables.

Data loading: Loading the transformed data into the appropriate fact and dimension tables of the data warehouse.

Data quality checks: Running defined data quality tests to detect any inconsistencies or errors in the loaded data.

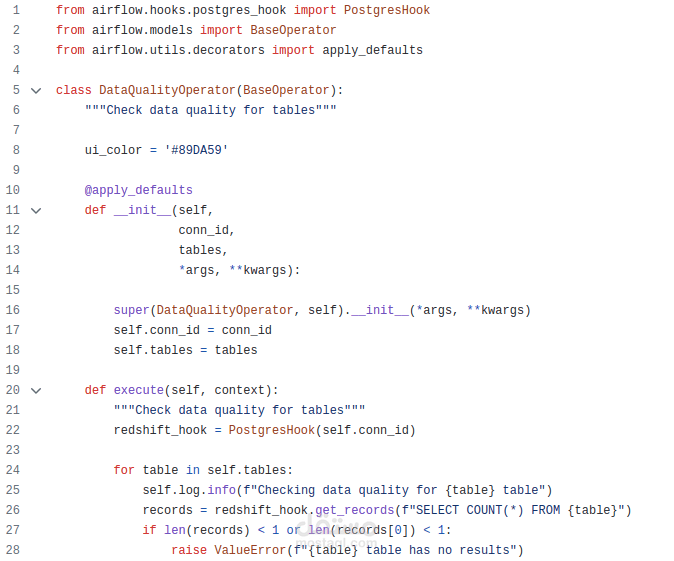

2. Custom Airflow Operators:

Python operators were implemented within the Airflow plugins directory to handle specific tasks within the ETL pipeline:

create_table.py: This operator creates tables in Redshift based on SQL statements specified in the `create_tables.sql` file.

stage_redshift.py: This operator facilitates the loading of JSON data files from S3 into designated staging tables within Redshift.

load_fact.py and load_dimension.py: These operators handle loading data into fact and dimension tables respectively, taking SQL statements, target database, and target table names as input.

data_quality.py: This operator executes data quality checks on the loaded data, running predefined validations defined in the `sql_queries.py` file.

3. Data Quality and Monitoring:

The `data_quality.py` operator performs checks on the loaded data to ensure accuracy and consistency. These checks can be customized based on specific data quality requirements.

Airflow provides built-in monitoring capabilities to visualize the execution status of the DAGs and individual tasks within the ETL pipeline. This allows for identifying any errors or delays in the process and facilitating troubleshooting.

Final Outcome

This project establishes an automated and monitored ETL pipeline for the music streaming data warehouse. Apache Airflow orchestrates the data movement process from S3 to Redshift, utilizing custom operators to perform specific tasks. Data quality checks are implemented to ensure data integrity throughout the pipeline. Real-time monitoring capabilities within Airflow provide insights into the overall workflow execution and facilitate proactive issue identification.

This automated and monitored ETL pipeline enhances the efficiency and reliability of data transfer from S3 to Redshift. Data quality checks minimize the risk of errors in the data warehouse, and Airflow's monitoring capabilities provide valuable insights for streamlining data operations.